Our Hosting Structure and Security


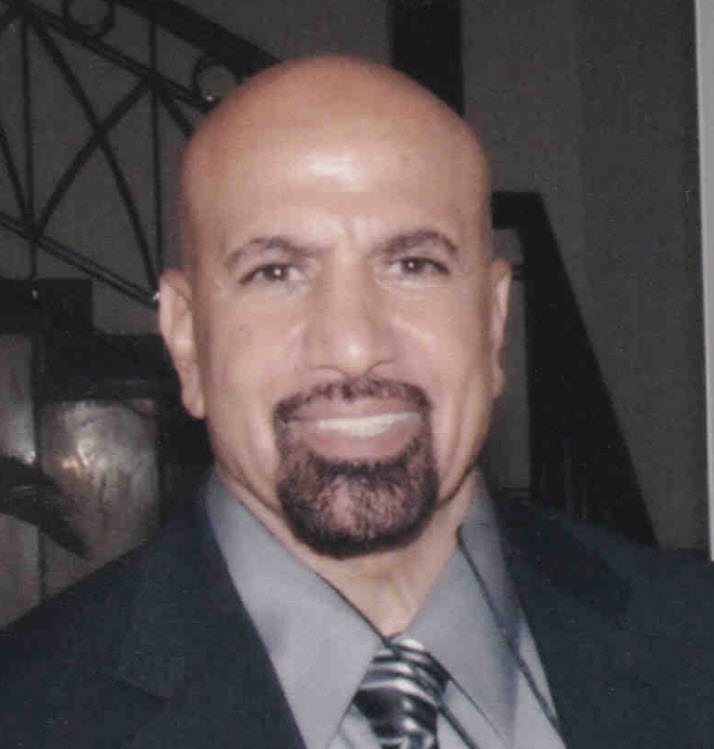

Introduction: Hosting security is now at the core of every system, and hackers are relentless in pursuing their goals and doing damage. Sadly, hackers can focus narrowly on their targets, and they seem to have endless resources and support. Hackers have also added Artificial Intelligence (AI) to their arsenal. Therefore, our security goal is to add features to any hosting that prevent hacking and secure the hosting at a reasonable cost. We present the hosting features of AWS and its competitors, such as Microsoft Azure, Google Cloud or any other hosting service, and let the world compare them with Our Homegrown Hosting Features and decide. We believe that Our Hosting Strategies, Structure, Tools and Products present a new way of thinking and address the core of hosting security. Our Homegrown Hosting Features address hackers' use of AI and the race to offset hackers' AI tools. They also reduce the cost of hosting in terms of time, money, effort and reusability.

Which hosting is better: AWS, Azure or Google Cloud?

What are the top cloud providers? Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) are the big three cloud service providers today. Together, they take up 66% of the worldwide cloud infrastructure market, up from 63% the previous year, according to Synergy Research Group.

AWS vs Google Cloud Platform (GCP): The biggest difference between AWS and GCP is size. AWS offers over 240 cloud products, while GCP delivers about 150. In addition, AWS has over 33% of the cloud services market compared to GCP's 11%.

Azure vs Google Cloud Platform (GCP): Azure has a broader global infrastructure, spanning 60 regions, offering low-latency access and more region pairs than Google Cloud. Azure excels in enterprise-focused infrastructure and platform services, while Google Cloud prioritizes cloud-native approaches with leadership in containers and serverless.

Common or Standard (Vanilla) Hosting Features: The following hosting essentials should be considered when determining whether a web host is secure:

1. Secure Information Transfer
2. Firewalls
3. Physical Server Security
4. Network and Resource Monitoring
5. Domain Name Security
6. Type of Hosting Service
7. Compression and Encryption Support
8. Malware Detection and Removal
9. Backups
10. DDoS Protection
11. Hosting Management
12. Customer Support
13. Physical Security

What Services Does AWS Host? Amazon Web Services offers a broad set of global cloud-based products including compute, storage, databases, analytics, networking, mobile, developer tools, management tools, IoT, security, and enterprise applications: on-demand, available in seconds, with pay-as-you-go pricing.

AWS Security Hosting Features: According to our internet search, the AWS Architecture Diagram Image presents AWS's recommended architecture for Web Application Hosting.

AWS Architecture Diagram

The following table presents brief descriptions of AWS features:

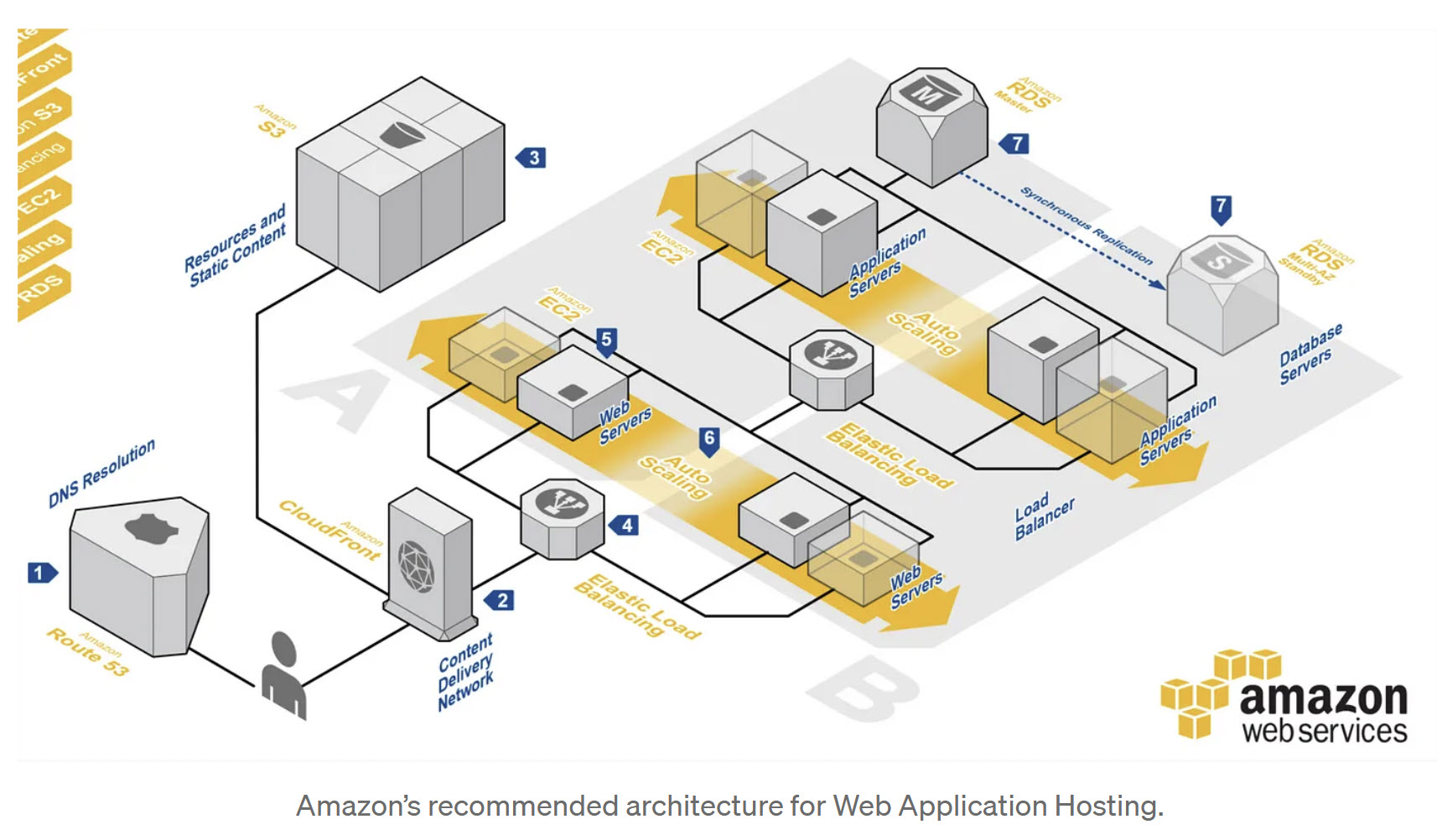

How is the performance of all the AWS security features? We cannot answer such a question; only AWS clients would be able to answer it. There must be a benchmark for AWS security services so that they can be compared with AWS's competitors.

What are the main features of Azure? The key features of Microsoft Azure include secure storage, scalability, reliability, diverse data handling, and advanced analytics capabilities. Azure's integration with artificial intelligence (AI) and machine learning (ML) services empowers businesses to leverage advanced analytics and automation capabilities.

Azure Security Hosting Features: According to our internet search, the Azure Architecture Diagram Image presents Azure's architecture for Web Application Hosting.

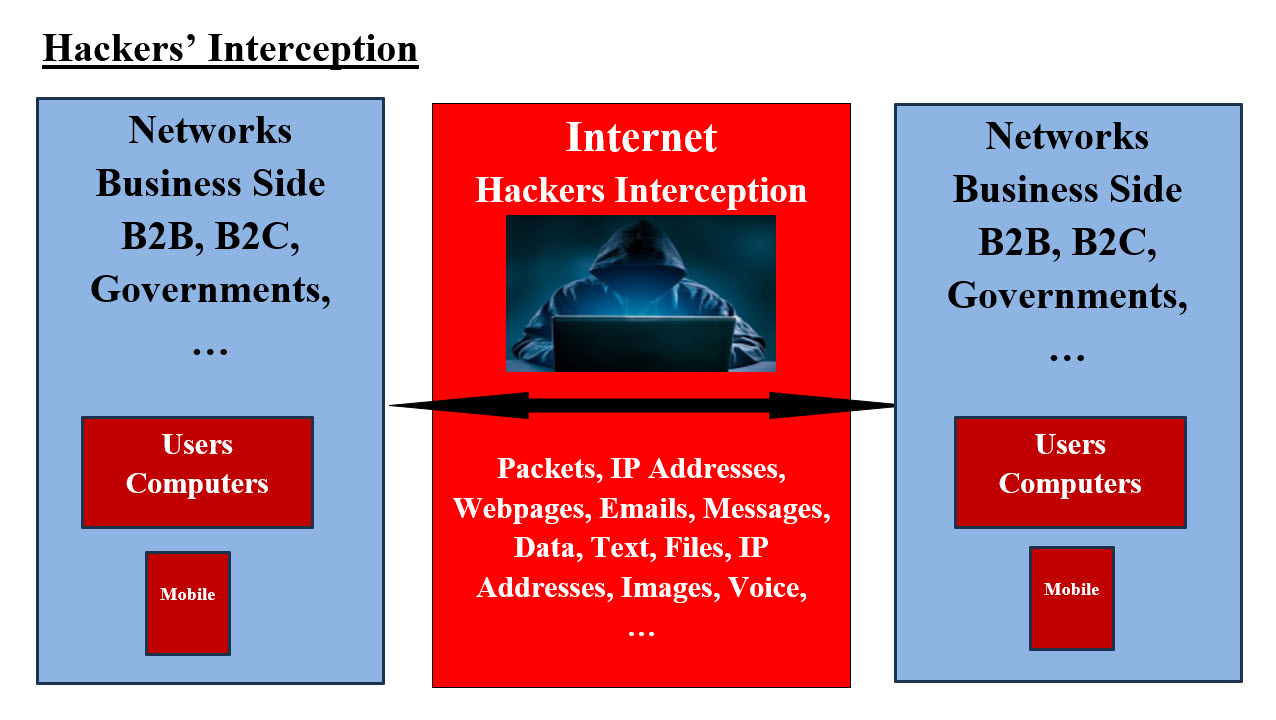

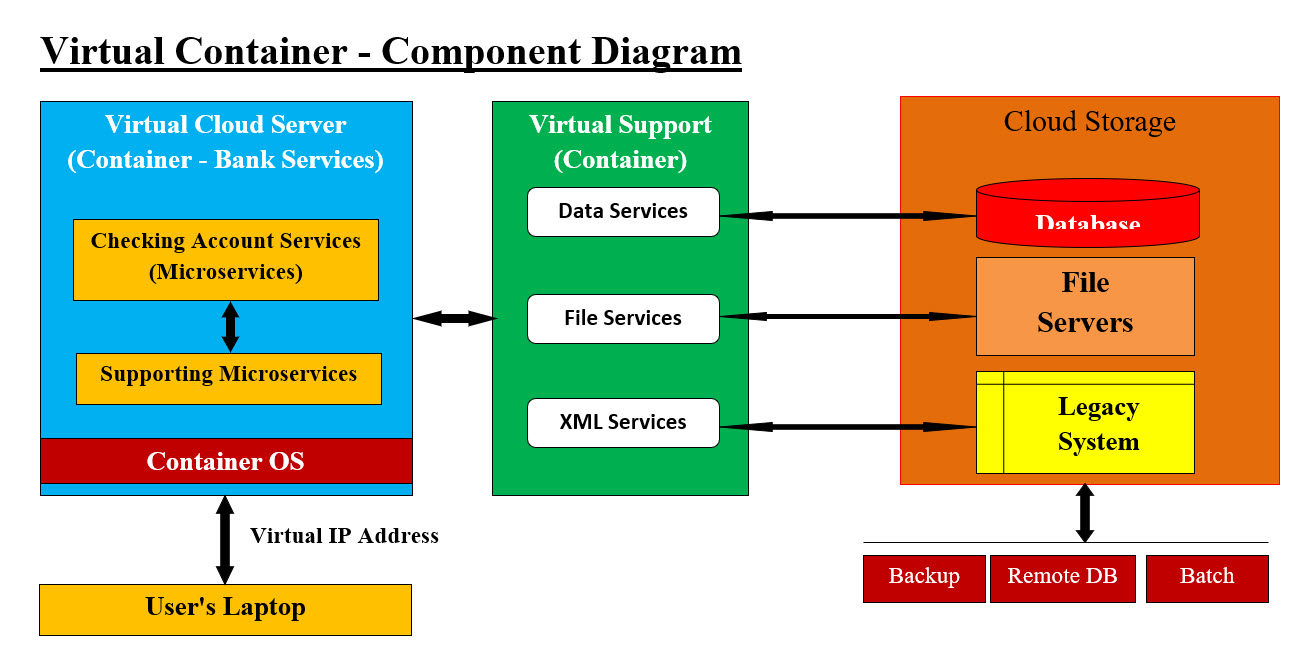

Azure Architecture Diagram

1. Application Security
2. Data Security
3. Host Security
4. Storage Security
5. Network Security
6. Identity and Access Management
7. Governance
8. Monitoring

How is the performance of all the Azure security features? We cannot answer such a question; only Azure's clients would be able to answer it. There must be a benchmark for Azure security services so that they can be compared with Azure's competitors.

Our Homegrown Security Hosting Features: Our Homegrown Security Features are a list of options that anyone can add to their existing hosting. Our homegrown options are very easy to implement, and they can replace AWS, Azure or any expensive hosting altogether. Most companies have their own infrastructure departments, but sadly they still use AWS and Azure. We present options for the long-term development of reusable, secured, customized Containers-Components, which would reduce the cost of any expensive hosting.

Hackers' Capabilities and The Use of Artificial Intelligence (AI) and Machine Learning (ML): It is very important that our audience has a picture of hackers' capabilities and of what they can access on the internet. Image #1 is a rough presentation of what hackers can intercept on the internet between users' computers, business networks and mobile devices.

Hackers Intercepts - Image #1

Image #1 presents a quick picture of what hackers can intercept: packets, IP addresses, webpages, emails, messages, data, text, files, images, voice, etc.

PC and Hackers: The following is what hackers can do with any user's PC or laptop:

1. Keep a copy of the cookies
2. Intercept packets
3. Track the user's keystrokes
4. See the user's screen
5. Run the user's system
6. Run the operating system
7. Learn the user's habits and history of actions from the cache
8. Use the user's computer to attack other systems
9. Use reverse engineering to learn about applications and add their own malicious code
10. Use Artificial Intelligence to hack
11. Use Machine Learning to hack

Assumption and Methodology: We assume that hackers can:

1. See (screenshots) what users are doing
2. Listen (keystrokes)
3. Run the show (run their own code)
4. Know how your applications and operating systems work and manipulate them (reverse engineering)
5. Know your habits and tendencies (tracking cache and cookies)
6. Track data (packet sniffing)
7. Hide within victims' systems
8. ... etc.

Hackers can also alter what they intercept on the internet and damage the content of packets, files, messages, emails, etc. They can overwhelm networks with bogus messages and calls. They can also launch Man-in-the-Middle attacks, which intercept and alter traffic between two parties, and Distributed-Denial-of-Service (DDoS) attacks, which flood systems, servers, or networks with traffic to exhaust resources and bandwidth.

How do hackers use AI in their attacks? By the same logic that applies to AI in security, hackers can use AI to automate their hacking and to understand and defeat detection, prevention, remediation and human behavior.

Automated Attacks: Hackers utilize AI algorithms to automate attacks, increasing speed, scalability, and sophistication.

Phishing and Social Engineering: AI-powered tools analyze vast amounts of data to create personalized phishing emails, messages, and chatbots, making scams more convincing.

How can AI be used in cybersecurity? AI-powered solutions can sift through vast amounts of data to identify abnormal behavior and detect malicious activity, such as a new zero-day attack. AI can also automate many security processes, such as patch management, making it easier to stay on top of your cybersecurity needs.

Our Hosting Strategies, Structure, Tools and Products: The key features of both AWS and Microsoft Azure include secure storage, scalability, reliability, diverse data handling, and advanced analytics capabilities. Both use artificial intelligence (AI) and machine learning (ML) services that empower businesses to leverage advanced analytics and automation. They also secure applications, data, hosts, storage, networks, identity and access management, governance and monitoring.

Static vs Dynamic: Our definition of Static vs Dynamic is:

Static = stationary = a sitting duck for target practice

Dynamic = energetic or forceful = what-when-where-how to attack

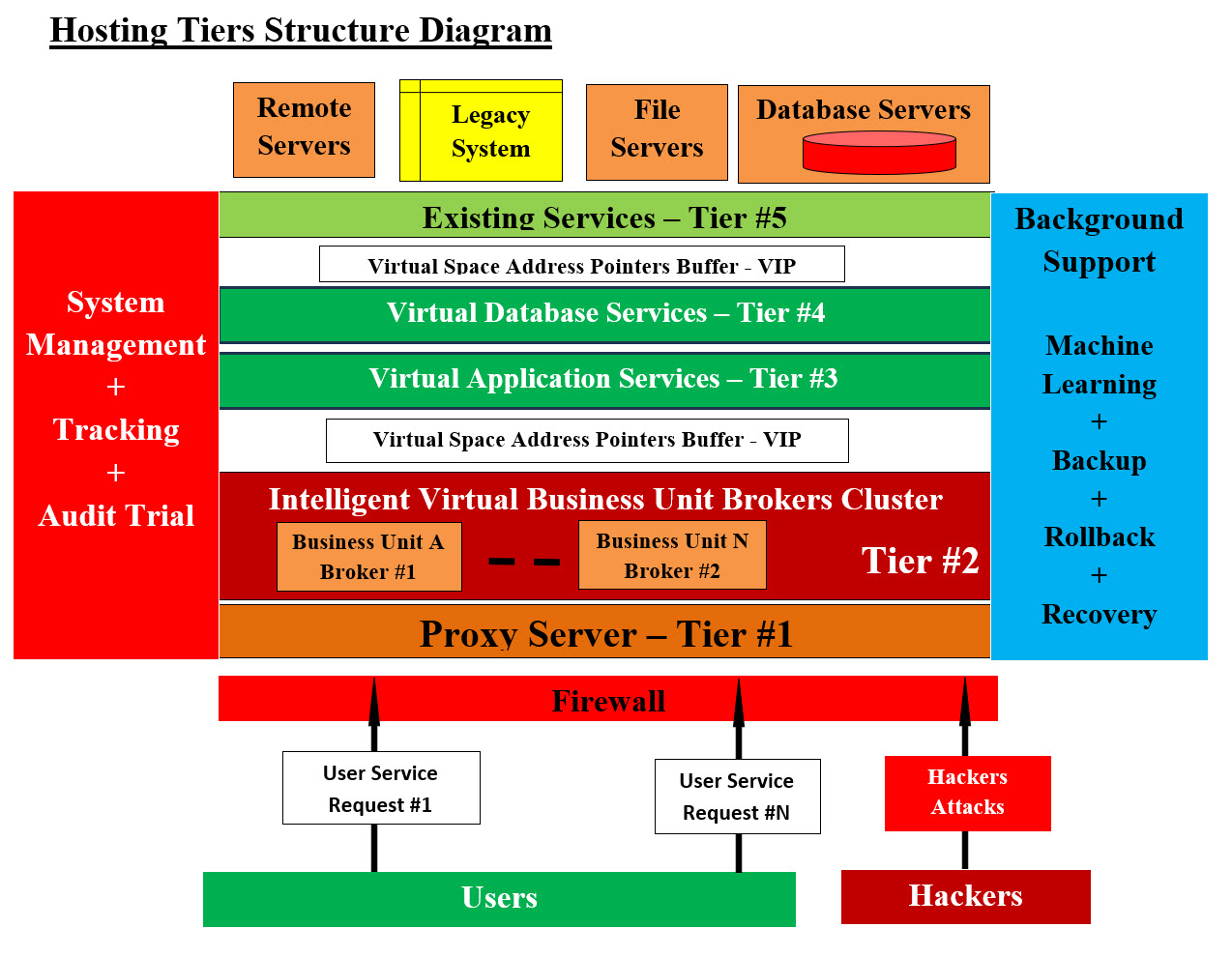

We do not want to sound sarcastic, but looking at the AWS and Azure Features Table, there is nothing dynamic about their features. The only dynamic process is the control of the cryptographic keys that are used to protect your data.

Strategies: We need more than one strategy to offset hackers' interceptions and the physical damage they do to internet traffic.

1. Keep Hackers Busy Looking for Our System Doors - The Tiger and Duck: Our strategy is to keep external and internal hackers and intruders busy trying to figure out how to access and attack our system. In the case of Man-in-the-Middle and Distributed-Denial-of-Service (DDoS) attacks, our VMs and their respective VIPs would change dynamically and periodically, so attackers would have to start all over and figure out again where to direct their attacks. The best analogy to help our readers and audience envision our approach is a YouTube video (it may still be online) about a tiger, a duck and a small swimming pond. In the video, a tiger chases a small duck in the pond; the duck dives under water, disappears, and comes up to the surface someplace else in the pond. The tiger keeps chasing the duck with no success. This video summarizes some of our strategies and approaches.

2. Approaches: The approaches we present are some of our defenses, and they can be applied with reasonable effort and resources. By reasonable, we mean doable, not rocket science.

3. Keep Hackers in the Dark: Our main strategy is to keep hackers in the dark, knowing nothing about our dynamic network structure and unable to figure out our approaches and tools. We need to create an intelligent virtual dynamic system that is constantly changing, so hackers never know what-when-where-how to attack.

4. Synchronization of System Access with Our Clients - Time and IP Addresses: We would develop synchronization schedules and browser redirections to keep hackers from knowing which IP or VIP address will be accessible and available to clients and visitors in the next time period (minutes-hours-days). Our browser support software would be able to determine the VIP address to which the browser needs to be redirected next (time dependent).

Our Hosting Structure: We are architecting-designing a system of multiple virtual tiers or layers-levels that would act as a buffer to any existing system. See the Hosting Tiers Structure Diagram Image for our Hosting Tiers Structure Architect-Design.
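Strategy 4 relies on the server and the browser-side support software agreeing on the next VIP address without ever announcing it. One way to sketch that, assuming both sides share a secret key and a pool of VIPs, is to derive the address for each time slot from an HMAC of the slot number. The HMAC scheme, the address pool, the five-minute slot length and the function names are illustrative assumptions, not a confirmed part of our design.

```python
from __future__ import annotations

import hashlib
import hmac
import time

# Hypothetical VIP pool (RFC 5737 documentation addresses, not real servers).
VIP_POOL = ["192.0.2.10", "192.0.2.11", "198.51.100.20", "203.0.113.30"]
SLOT_SECONDS = 300  # assumption: the active address rotates every 5 minutes


def current_slot(now: float, slot_seconds: int = SLOT_SECONDS) -> int:
    """Return the index of the time window that `now` (Unix seconds) falls in."""
    return int(now // slot_seconds)


def vip_for_slot(secret: bytes, slot: int, pool: list[str]) -> str:
    """Derive the active VIP for a time slot from a shared secret.

    The server and the browser-side software compute the same result
    independently; an observer without the secret cannot predict it.
    """
    digest = hmac.new(secret, slot.to_bytes(8, "big"), hashlib.sha256).digest()
    index = int.from_bytes(digest[:4], "big") % len(pool)
    return pool[index]


if __name__ == "__main__":
    shared_secret = b"example-shared-secret"  # hypothetical; provision securely
    slot = current_slot(time.time())
    print("active now:", vip_for_slot(shared_secret, slot, VIP_POOL))
    print("next slot: ", vip_for_slot(shared_secret, slot + 1, VIP_POOL))
```

Because the next slot's address can be computed ahead of time, the browser can prepare the redirection just before the switch. Both sides need loosely synchronized clocks (for example via NTP), and with a small pool consecutive slots may map to the same address; a larger pool makes repeats rarer.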

Our Hosting Tiers Structure Diagram

Users' Side and Cloud's Side: On which side would the supporting software and apps be executed? It is very important for our audience to know where the applications or apps would be running:

1. User's Side (Visitor's Machine)
2. Cloud Server

Hackers can only interrupt the running applications or run their own malicious code. Therefore, our Hosting Structure is architected-designed to keep the hackers' code from running. As for interruption, our architecture runs in virtual machines (VMs) that would be terminated, and all the code within the VMs, including the hackers' code, would be wiped out. All the VMs are created, executed and terminated in a very short time. The VIP addresses of the proxy servers and the broker servers would be periodically changed, so hackers would be attacking virtual servers that are no longer running or no longer exist.

Virtual Thinking: To simplify virtualization and Virtual Machines (VMs), we need our audience to view VMs the same way they would view file folders. Any file folder can have files and subfolders, or be part of a folder structure. We can create any number of folders on the fly and delete them on the fly as well. The same concept applies to VMs: creating and deleting VMs is very much like creating and deleting folders. The only difference is that a VM is a container with all the needed processes, including the operating system, the needed applications and resources. VMs exist in memory and can be saved to the hard drive as a hardcopy. Recreating VMs from their respective hardcopies on the hard drive can be done easily and very fast; restoring a VM is simply copying its hardcopy back to memory. We are making these saving and restoring processes part of rollback and recovery. The following table has the list of our system tiers and tools, methodologies and approaches:

Some of Our Tools and Approaches: "There is more than one way to skin a cat." We present some of our tools, approaches, templates, designs, etc. to help our team be on the same page. We are also open to other team members' ideas, tools, approaches, templates, designs, etc.

Containers-Components: We have addressed Containers-Components on other pages, and for the sake of consistency we are posting them again. The best way to explain the difference between a Component and a Container is the example of creating a virtual server (container) in which applications (components) and virtual data storage (components) run. Therefore, a container has components running within it. Another example: a Virtual Private Server Hosting (Container) provides a virtualized environment (Component) for the website, which is isolated from other accounts on the same server. Within the virtual environment (which can also be a container) run a number of applications (components). The website can also be structured into Containers and Components.

Containers-Components Structure: It is critical to start thinking in terms of containers and components in order to structure any hosting as services of Containers and Components.

Microservices vs. Monolithic Architecture:

What are microservices? Microservices are an architectural and organizational approach to software development where software is composed of small independent services that communicate over well-defined APIs. In software engineering, a microservice architecture is an architectural pattern that arranges an application as a collection of loosely coupled, fine-grained services, communicating through lightweight protocols. One of its goals is that teams can develop and deploy their services independently of others.

What is monolithic architecture? A monolithic architecture is a traditional model of a software program, which is built as a unified unit that is self-contained and independent from other applications. The word "monolith" is often attributed to something large and glacial, which isn't far from the truth of a monolith architecture for software design. In software engineering, a monolithic application is a single unified software application which is self-contained and independent from other applications, but typically lacks flexibility.

Our Answer to both Microservices and Monolithic Architectures: Regardless of the existing development environment (Microservices or Monolithic Architecture), our Virtual Intelligent Brokers Units approach would be able to accommodate any existing or newly developed system. Our Virtual Container-Component approach would be able to create the virtual environment for any system, whether it uses a Microservices or a Monolithic Architecture.

Virtual Space:

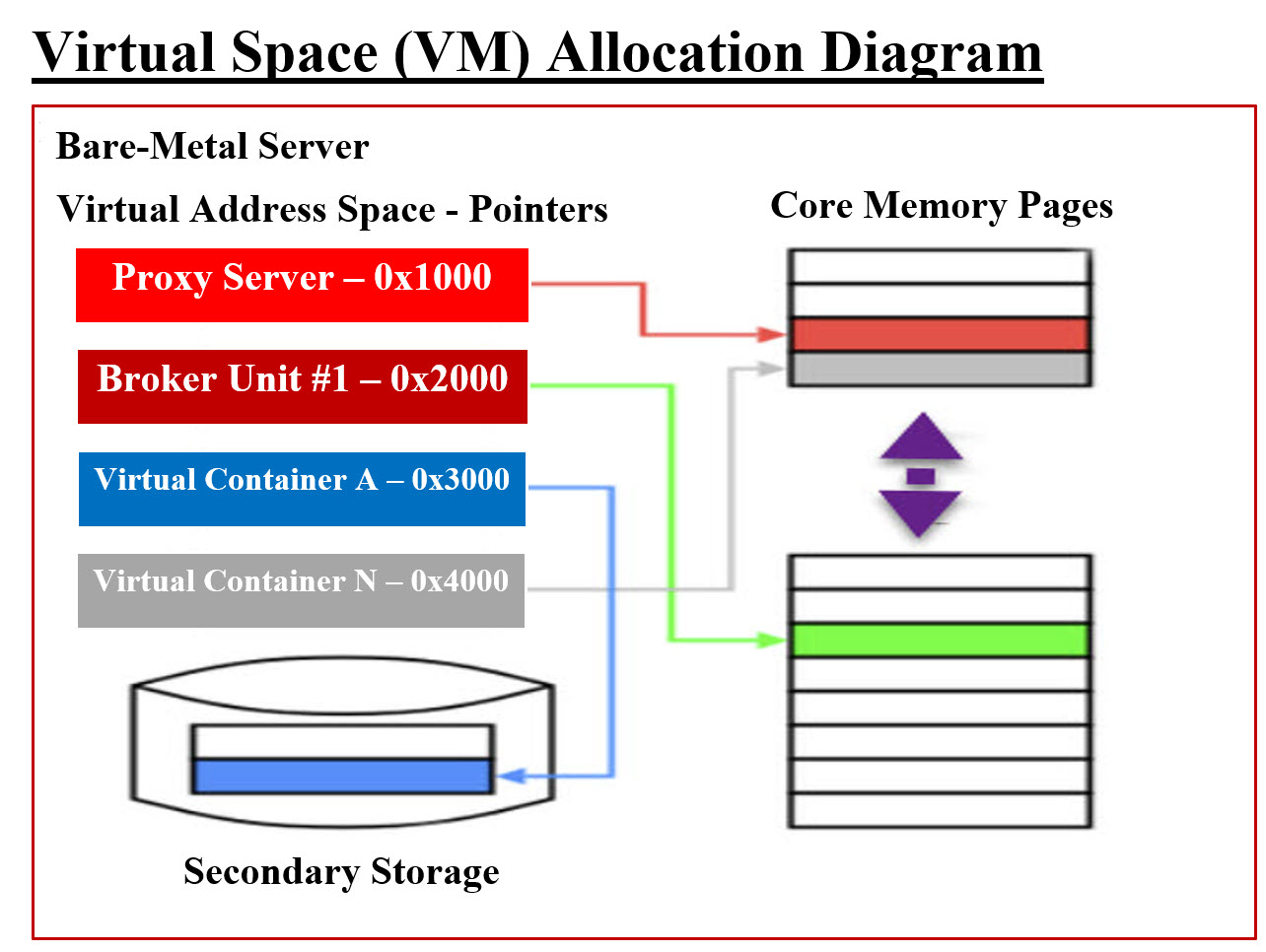

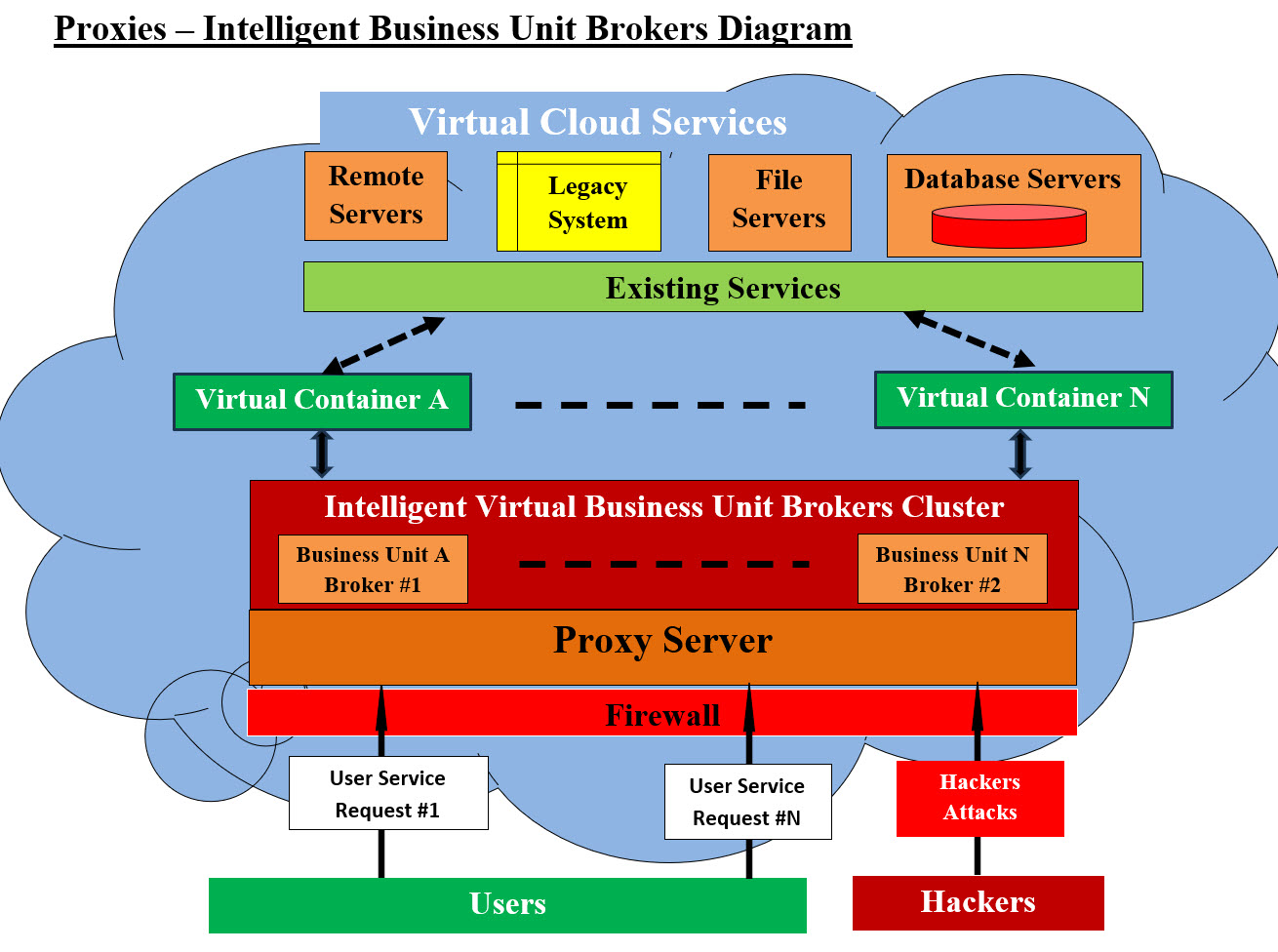

Virtual Space Allocation - Image #2

Internet Virtual Space Definition: Virtual Spaces are where real-time interaction happens online. Whether we are working or playing, we live and interact within online Virtual Spaces. A Virtual Space is a digital environment where people and devices around the world can seamlessly collaborate, socialize, and exchange information as it happens.

Our Definition of Virtual Space: Our definition of virtual space is shown in Image #2, where VMs are created and freed in the server's memory. These VMs can only be accessed through memory pointers created by the operating system or hypervisor. Looking at Images #2 and #3, our attempt here is to present how the system VMs (containers) would be dynamically created on a bare-metal server, with their actual locations accessible only through the memory pointers to these VMs. These VMs would also be destroyed and their memory space recycled for new VMs. The following VMs would be created dynamically:

1. Proxy Server(s)
2. Intelligent Business Unit Broker Units Servers
3. Dynamic creation of VM-Container-Components which are created from the existing services

Hypervisor: A hypervisor is a special kind of software that allocates resources. The hypervisor interacts directly with the physical server and works as a platform for each virtual server's operating system.

OS Level Virtualization: OS-level virtualization does not use a hypervisor at all. The virtualization capability is part of the host OS, which performs the same kind of functions that a fully virtualized hypervisor would.

Our Proxies-Intelligent Business Unit Brokers: The best way to present Our Proxies-Intelligent Business Unit Brokers is by looking at Image #3, where a cluster of virtual proxy servers acts as Intelligent Business Unit Brokers. Each Intelligent Business Unit Broker is an independent virtual server-Container-VM. Each is responsible for addressing a specific business cloud request for a targeted service, regardless of the service's structure (Microservices or Monolithic). In short, we have loosely coupled services that run independently of other services. We can also develop independent proxy server(s) as a security buffer to redirect any request to the proper Intelligent Business Unit.
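The proxy-to-broker redirection described above can be sketched as a small dispatcher. This is a minimal sketch, assuming each broker is reachable as a callable registered under a service name: the proxy forwards known requests to exactly one broker and rejects unknown ones at the buffer. The class, function and service names (`ProxyServer`, `accounts_broker`, `"accounts"`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Request:
    service: str  # hypothetical service name, e.g. "accounts"
    payload: dict = field(default_factory=dict)


# A broker is modeled as a plain function that handles one business service.
Broker = Callable[[Request], dict]


class ProxyServer:
    """Security buffer that forwards each request to exactly one broker."""

    def __init__(self) -> None:
        self._brokers: Dict[str, Broker] = {}

    def register(self, service: str, broker: Broker) -> None:
        self._brokers[service] = broker

    def handle(self, request: Request) -> dict:
        broker = self._brokers.get(request.service)
        if broker is None:
            # Unknown services are rejected here and never reach the inner tiers.
            return {"status": 404, "error": f"unknown service {request.service!r}"}
        return broker(request)


def accounts_broker(request: Request) -> dict:
    """Hypothetical broker for an 'accounts' business service."""
    return {"status": 200, "service": "accounts", "echo": request.payload}


proxy = ProxyServer()
proxy.register("accounts", accounts_broker)
print(proxy.handle(Request("accounts", {"id": 42})))
print(proxy.handle(Request("admin")))
```

Keeping the service-to-broker table inside the proxy means outside callers only ever see the proxy, so the brokers behind it can be recreated or moved without clients noticing.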

Virtual Proxies-Intelligent Business Unit Brokers - Image #3

Our Intelligent Business Unit Brokers: Our Intelligent Business Unit Brokers have the job of creating the service virtual container-components. Each broker manages and tracks the performance of the requested services (Legacy included). It tracks the services and grades them based on how easy they are to run, performance, CPU usage, memory usage, testing, storage, security, rollback, reusability, etc. It creates rollback options and handles dynamic vertical and horizontal scaling. The following is an example of Our Intelligent Business Unit Broker as bank services.

Bank Service Application (Microservices) Image

The Bank Service Application (Microservices) Image presents a rough draft of a Container-Component structure with two virtual servers. The first virtual cloud server (container) has a bank service application (microservices) and all its supporting software. The virtual server or Virtual Machine (VM), which is a container, has its own operating system and resources. The second virtual cloud server-container has all the supporting data services applications. All the services are graded to help create a report on any existing services (old, new, Legacy, remote, etc.) that would need updating in terms of performance and service. Every Unit Broker also helps with the audit trail of all requests and their users.

Cloud Tiers: Image #3 presents the following tiers:

1. Existing system, which would include Legacy System, databases, filing system, remote services, etc.
2. Existing services
3. Dynamic creation of VM-Container-Components which are created from the existing services
4. A cluster of Intelligent Virtual Business Unit Brokers
5. Proxy Servers to handle outside requests
6. Firewalls

Security Buffers: There are three security buffers created in the system's virtual space, which hackers would need to find and copy their malicious code into.

Virtualization Plus DevOps Support: Images #2 and #3 present a rough draft of a bare-metal server that would be used to create all the dynamically allocated VMs (containers). DevOps or the Infrastructure Department would be responsible for creating these services and their supporting software and resources. Such creation, destruction-deletion and recycling must be automated and intelligent.

Production VMs and DevOps Services: Handling the production load would require DevOps or the Infrastructure Department to have work plans and to run a number of statistics on the actual production loads. The number of VMs and their supporting bare-metal servers would have to be available for any production(s) and for testing any running system(s).

Machine Learning (ML) and Audit Trail: Machine Learning (ML) and Audit Trail would run in the background to help with decision-making and with tracking system users and system performance.

Security Concerns and Hackers' Attacks: Because VMs are constantly created, destroyed and recycled, and their memory space is wiped clean of all containers and components, hackers' attacks and code would be of no value and could not do anything to the system.

Dynamic Virtual IP addresses: Looking at Image #2, every VM-Container created would be accessed using a memory pointer, which would be translated to a VIP address for handling any request.

Virtual Address: A virtual address space, or address space, is the set of ranges of virtual addresses that an operating system makes available to a process.

VIP Addresses: A virtual IP address (VIP or VIPA) is an IP address that doesn't correspond to an actual physical network interface. In our design, these VIPs are memory pointers that are translated into network VIPs (see Image #2).

Virtual Strategies: The main strategy is to provide dynamic services that answer user requests. In short, we would create a virtual stateless request and response. This approach would require data synchronization and buffering. We would create a dedicated virtual server to handle each request, and it would respond with the requested services. Simply put:

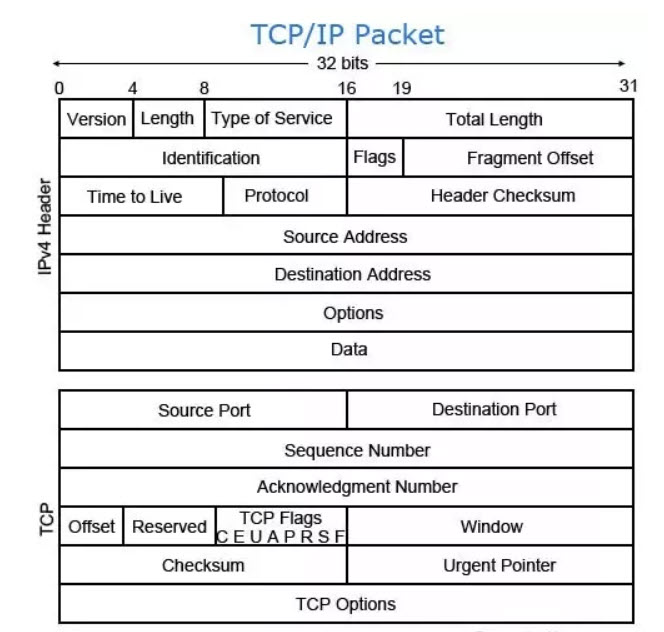

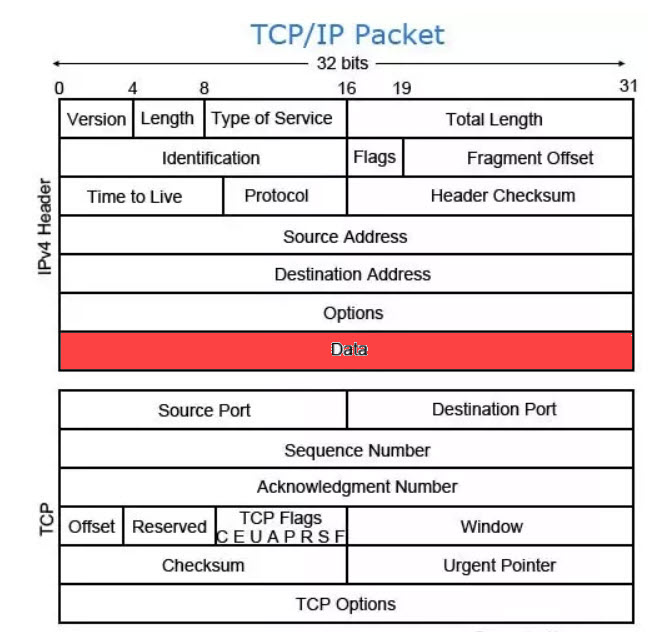

This can only be achieved by using VMs to handle every request.

Compression-Encryption Browser Support Software

Introduction: Compression-decompression, encryption-decryption and other means are used by cybersecurity experts, as well as by hackers, to protect data or convert it into a puzzle so that no one can use the data if it is captured. Ransomware attackers use such tactics. Many vendors and institutions, such as Google, Amazon, Facebook, YouTube and so on, have built their own tools to prevent hackers from creating issues with data usage and data transport. Another issue is that hackers can damage the transported data in the packets or by other means. Therefore, it is very important to protect data in transport and to avoid having to resend data when it is damaged. We need a number of methodologies to ensure the integrity or correctness of the data being transported. Sadly, AI, Machine Learning and Reverse Engineering have given hackers an edge that can be used to figure out the encryption-compression. This is another burden cybersecurity experts have to handle. Our use of the computer clock (which hopefully is universal across all computer users and companies) is another key. In short, if the computer clock currently equals XCVB (in milliseconds), then any timestamp less than XCVB would raise a red flag. This means that if the timestamp is two days old, or is some meaningless number, then we do have an issue.

Our Goals: Our goal is to develop a simple, dynamic, homegrown, automated and intelligent system with rollback to encrypt-decrypt-compress-decompress and tag the data with indices for correctness-integrity. We need to brainstorm such development, and we will try to present our simplified approaches to our audience and future partners. To make life tougher for anyone who captures our data, we literally have to make the stolen data of no value.
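The clock-based red flag described above can be sketched as a freshness check on a millisecond timestamp. In practice a small tolerance is needed, because messages take time to arrive and clocks on different machines drift; the 30-second age limit and 2-second future skew below are illustrative assumptions, not values from our design.

```python
import time

MAX_AGE_MS = 30_000   # assumption: older than 30 s is treated as a possible replay
MAX_SKEW_MS = 2_000   # assumption: tolerated clock drift into the future


def now_ms() -> int:
    """Current Unix time in milliseconds (the XCVB value in the text)."""
    return time.time_ns() // 1_000_000


def timestamp_is_suspicious(ts_ms: int, current_ms: int) -> bool:
    """Raise a red flag for timestamps that are too old or in the future."""
    age_ms = current_ms - ts_ms
    return age_ms > MAX_AGE_MS or age_ms < -MAX_SKEW_MS


current = now_ms()
print(timestamp_is_suspicious(current - 1_000, current))                 # False
print(timestamp_is_suspicious(current - 2 * 24 * 3600 * 1000, current))  # True: two days old
print(timestamp_is_suspicious(-5, current))                              # True: meaningless value
```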
We need to build into our development a number of dynamic factors-variables that are tough to figure out, and add a time element that changes with the computer clock. The following is a small sample, so that we do not overwhelm our audience with too much technical jargon. The key ingredients are:

1. Use a numbering system - turn everything into numbers
2. Dynamic
3. Intelligent
4. Timestamp
5. Trackable
6. Reversible
7. Machine Learning
8. Use Indices
9. Hashed
10. Delimiters index
11. Template driven
12. Navigation - using location (server-country) in the key building
13. Rollback
14. Audit Trail
15. Integrable

Example: Presenting a real-time example with a running model would be hard to do, but we present our approaches and thinking, which are simple and doable. Our approaches have a number of dynamic variables as keys, such as the computer clock as a timestamp. The key is that there will be constant changes, so hackers would not have time to figure out the next move. Hackers use AI and Machine Learning plus Reverse Engineering to get the logic and code. We need to be ahead of the hackers.

HTML Code for Hello World: Our main approach is converting things into numbers or integers. Such numbers can be easily manipulated and formatted into any number of formats or values. Let us look at how we would convert the HTML "Hello World" code into a string of numbers and delimiters. Such a string is self-encoded: the senders and receivers would be the only ones who know what to do with it, even if hackers have used reverse engineering to figure out the logic and processes.

`<B>Hello World</B>`

Integer Conversions: "<" = 60, "B" = 66, ">" = 62, "H" = 72 …

Delimiter char: %%%

We can create a text string of numbers using integers and delimiters:

`Start_Code%%%Timestamp%%%EncryptCode%%%DeComp%%%60%%%66%%%62%%%72%%%...%%%End_Code`

What do extensions, add-ons and plug-ins do?
Applications and browsers support extensions, add-ons, and plug-ins, which allow third-party developers to:

1. Create specific functions
2. Easily add new features
3. Expand functionality

Legitimate application extensions and plug-ins include add-ons that can encrypt and decrypt messages, and so on.

Dynamic webpage building: What technology causes a web page to be dynamic? Web pages that use server-side scripting are often created with the help of server-side languages such as PHP, Perl, ASP, JSP, JavaScript, ColdFusion and other languages. These server-side languages have traditionally used the Common Gateway Interface (CGI), among other mechanisms, to produce dynamic web pages.

Dynamic Processes: Our dynamic processes would be developed to create any number of webpages as files (which can be JSON). Each file is composed of:

1. Decompression keys
2. The webpage content
3. A compressed ID key based on the timestamp - hackers would not be able to recreate it even if they had the code
4. A return key for the next request

The next request, one second later, would receive a totally different set of keys and code based on the computer clock. The Browser-Side-Support Software would parse the compressed file sent from the server, decode and decompress the webpage file it received, and build the requested webpage. We would use templates to build an almost infinite number of codes, keys, indices, timestamps, plus other dynamic variables.

Intelligent Automated Dynamic Security Key Builders: We have created a number of dynamic key builders for:

1. Cookies
2. Encryption
3. Compression
4. Packet Tracking
5. Chips Communications
6. Personal Security Key
7. Privacy Security Key
8. Group Security Key
9. Companies Security Key
10. Logging, Tracking and Audit Trail
11. Internal Hacker Indices
12. Other topics

We will be brainstorming our key approaches with the clients and teams we work with.
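The Hello World integer conversion and the delimited template shown earlier can be sketched as a round trip: each character becomes its integer code (`ord`), all fields are joined with the `%%%` delimiter, and the receiver reverses the process. The `EncryptCode` and `DeComp` fields are carried as opaque placeholders, since their contents are not specified here, and the function names are hypothetical. Note that on its own this mapping is an encoding rather than encryption (the integers are standard character codes that anyone can reverse), so the protection would have to come from the dynamic keys, timestamps and encryption fields described above.

```python
from __future__ import annotations

DELIMITER = "%%%"


def encode(html: str, timestamp_ms: int, encrypt_code: str, decomp: str) -> str:
    """Turn each character into its integer code and join all fields with %%%."""
    numbers = [str(ord(ch)) for ch in html]  # "<" -> "60", "B" -> "66", ...
    fields = ["Start_Code", str(timestamp_ms), encrypt_code, decomp, *numbers, "End_Code"]
    return DELIMITER.join(fields)


def decode(message: str) -> tuple[int, str]:
    """Reverse encode(): return the timestamp and the original text."""
    fields = message.split(DELIMITER)
    if len(fields) < 5 or fields[0] != "Start_Code" or fields[-1] != "End_Code":
        raise ValueError("malformed message")
    timestamp_ms = int(fields[1])
    # fields[2] and fields[3] are the opaque EncryptCode and DeComp placeholders.
    text = "".join(chr(int(n)) for n in fields[4:-1])
    return timestamp_ms, text


msg = encode("<B>Hello World</B>", 1_700_000_000_000, "EncryptCode", "DeComp")
print(decode(msg))  # (1700000000000, '<B>Hello World</B>')
```

Because the numbers never contain `%`, the delimiter cannot collide with the encoded content; the placeholder fields must simply avoid containing `%%%` themselves.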
Packets Tracking and Packets Rerouting: The goal of this section is to present how we can use Transmission Control Protocol/Internet Protocol (TCP/IP) and internet packets in cybersecurity to prevent attacks such as Man-in-the-Middle and Distributed-Denial-of-Service (DDoS). We need our readers and audience to envision internet communication and data exchange, such as internet packets. Therefore, we present a number of definitions and concepts to make our approach to Packets Tracking and Packets Rerouting easier to understand.

We are not here to change the world: TCP is used extensively by many internet applications, including the World Wide Web (WWW), email, File Transfer Protocol, Secure Shell, peer-to-peer file sharing, and streaming media. Because most communication on the internet requires a reliable connection, TCP has become the dominant protocol. It operates at the transport layer of the OSI model, and it establishes a reliable connection through a process called the three-way handshake: Host 1 sends a synchronization (SYN) request to Host 2, Host 2 replies with a synchronization-acknowledgment (SYN-ACK), and Host 1 confirms with an acknowledgment (ACK). Our proposal and approaches are not aimed at changing TCP/IP or enforcing-imposing anything. What we present is our approach to using TCP/IP in our cybersecurity and hosting support. We plan to use TCP/IP to handle hackers' attacks and their AI tools.

Transmission Control Protocol/Internet Protocol (TCP/IP): TCP/IP is a set of rules that governs the connection of computer systems to the internet. It is a suite of communication protocols used to interconnect network devices on the internet. The Internet protocol suite, commonly known as TCP/IP, is a framework for organizing the set of communication protocols used in the Internet and similar computer networks according to functional criteria.

What does Internet packet mean? An Internet packet is a group of communication information that is formatted, addressed, and sent using Internet communication protocols.

Packet Structure:

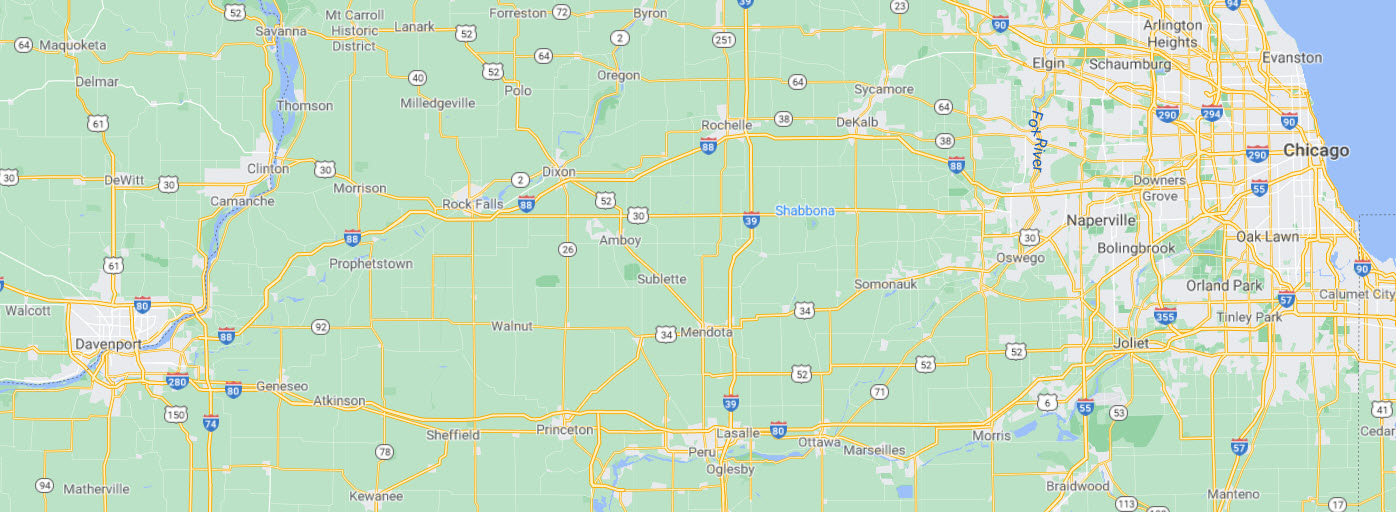

Packet Structure

The Maximum Transmission Unit (MTU) on Ethernet networks is set to 1500 bytes by default. This includes all the overhead of the IP packet headers and other protocols used during the transmission of the packet. If, during the transmission of an IPv4 packet, a protocol adds to the size of the packet, the packet will be fragmented to fit.

Payload: The payload is called the body or data of a packet. This is the actual data that the packet is delivering to the destination. If a packet is fixed-length, then the payload may be padded with blank information to make it the right size. Depending on the network, the payload size can range from 48 bytes to 4 KB. The payload is the only data received by the destination, as the header information is stripped from the packet when it reaches the destination. Payloads can also carry security threats: almost any type of malware can be incorporated into a payload to create executable malware. Malicious actors, as well as penetration testers, use payload generators to incorporate an executable piece of malware into a payload for delivery to targets.

Packet Routing: Packet routing is the forwarding of logically addressed packets from their source toward their ultimate destination through intermediate nodes. Routing is the process by which systems decide where to send a packet. Routing protocols on a system "discover" the other systems on the local network. When the source system and the destination system are on the same local network, the path that packets travel between them is called a direct route.

How are Internet packets routed? When a router receives a packet, the router checks its routing table to determine whether the destination address is for a system on one of its attached networks or whether the message must be forwarded through another router. It then sends the message to the next system in the path to the destination.
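The routing-table check described above is, in standard IP routing, a longest-prefix match: among all table entries whose network contains the destination address, the router uses the most specific one. A minimal sketch using Python's standard `ipaddress` module follows; the table entries and next-hop addresses are hypothetical (taken from documentation address ranges), and the sketch handles IPv4 only.

```python
import ipaddress

# Hypothetical routing table: (destination network, next hop).
# "direct" means the destination is on one of the router's attached networks.
ROUTES = [
    (ipaddress.ip_network("192.0.2.0/24"), "direct"),
    (ipaddress.ip_network("198.51.100.0/24"), "10.0.0.2"),
    (ipaddress.ip_network("198.51.100.128/25"), "10.0.0.3"),
    (ipaddress.ip_network("0.0.0.0/0"), "10.0.0.1"),  # default route
]


def next_hop(destination: str) -> str:
    """Pick the most specific (longest-prefix) route containing the address."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if addr in net]
    _, hop = max(matches, key=lambda route: route[0].prefixlen)
    return hop


print(next_hop("192.0.2.55"))      # direct
print(next_hop("198.51.100.200"))  # 10.0.0.3
print(next_hop("198.51.100.5"))    # 10.0.0.2
print(next_hop("203.0.113.9"))     # 10.0.0.1
```

The default route `0.0.0.0/0` matches every IPv4 address, so the lookup always finds at least one entry; real routers use specialized data structures (such as tries) rather than a linear scan.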
Routing Analogy: The internet is a network of highways on which data travels from one computer network to another. Data is packaged in small units known as packets. There are rules and regulations governing how these packets move. A router receives and sends data on computer networks. To make an analogy of how data travels on the internet, let us look at the Chicago-Davenport Possible Routes Image, which presents the road map between Chicago, IL and Davenport, IA. A driver may choose among a handful of possible routes based on weather, time of day, traffic, construction, etc. For example, the 294-88 highway route may start in Chicago, pass through Downers Grove, Dixon, Rock Falls and Prophetstown, and end at Davenport. The same applies to internet routes, where cities are replaced by computer networks.

Chicago-Davenport Possible Routes

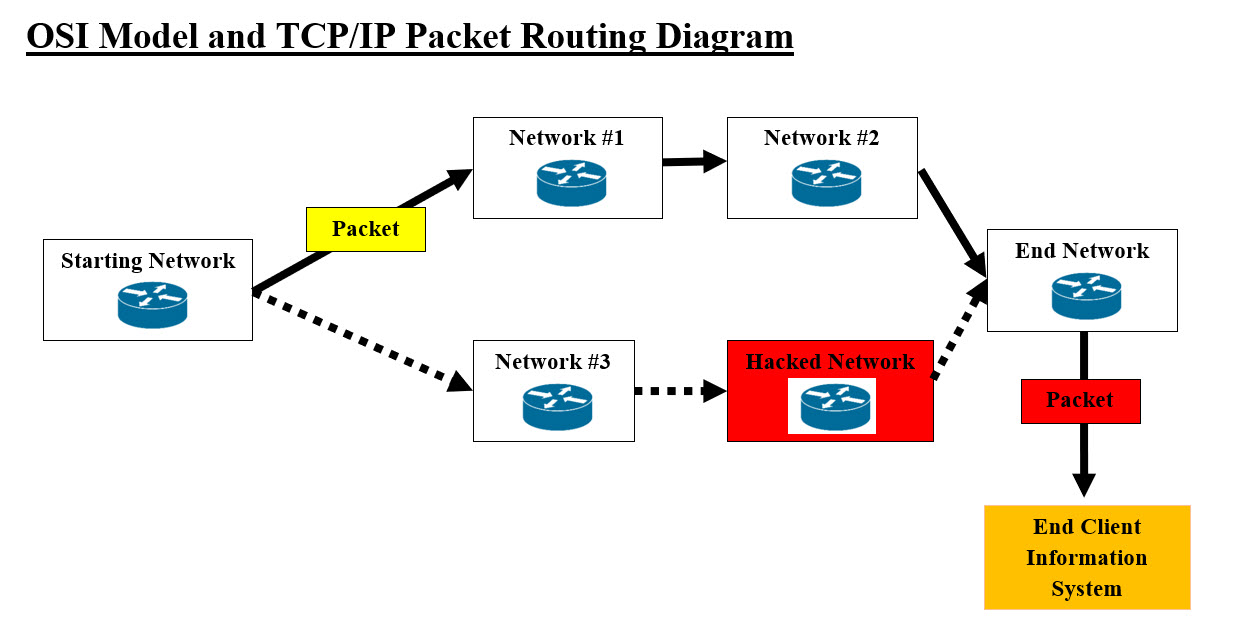

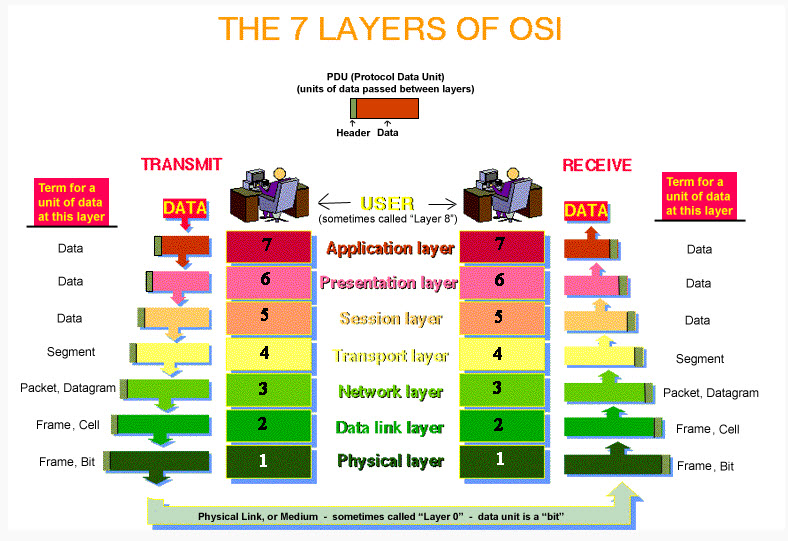

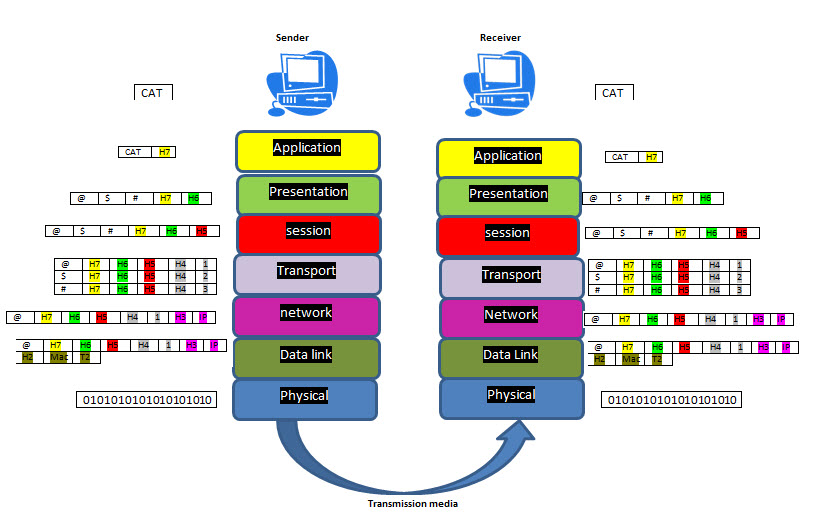
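Choosing among the Chicago-Davenport routes is a shortest-path problem, and link-state routing protocols such as OSPF solve the same problem for networks using Dijkstra's algorithm. The sketch below runs Dijkstra's algorithm over the towns named above plus an invented alternative through Joliet; the edge costs are made-up numbers standing in for distance, traffic or congestion, not real mileages. Raising one cost, as congestion or a compromised network might, makes the algorithm pick a different route.

```python
import heapq


def build_graph(edges):
    """Undirected weighted graph as {node: {neighbor: cost}}."""
    graph = {}
    for a, b, cost in edges:
        graph.setdefault(a, {})[b] = cost
        graph.setdefault(b, {})[a] = cost
    return graph


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm: return (total_cost, path) for the cheapest route."""
    queue = [(0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, step in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + step, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical costs (not real mileages) for the towns on the I-294/I-88 route,
# plus an invented alternative through Joliet.
EDGES = [
    ("Chicago", "Downers Grove", 2),
    ("Downers Grove", "Dixon", 8),
    ("Dixon", "Rock Falls", 1),
    ("Rock Falls", "Prophetstown", 2),
    ("Prophetstown", "Davenport", 3),
    ("Chicago", "Joliet", 3),
    ("Joliet", "Davenport", 14),
]

roads = build_graph(EDGES)
before = shortest_route(roads, "Chicago", "Davenport")
# Congestion (or a compromised network) makes one segment more expensive.
roads["Downers Grove"]["Dixon"] = roads["Dixon"]["Downers Grove"] = 12
after = shortest_route(roads, "Chicago", "Davenport")
print(before)
print(after)
```

With these costs the first call picks the route through Downers Grove, Dixon, Rock Falls and Prophetstown (cost 16); after the Downers Grove-Dixon segment becomes more expensive, the route through Joliet (cost 17) wins.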

Packet Passage Routes: Packets traveling through such routes have to pass through each of the networks along the way. Nothing stops any of these routing networks from keeping a copy of each packet, damaging the packets, or stopping the packets from going to the next network. What we just described are some of the possible cybersecurity issues. As for privacy, both hackers and companies can use the data in these packets to their advantage. For example, mobile data can be accessed by other Apps running on the same mobile device.

Open Systems Interconnection (OSI) Model: The OSI model shows how data is packaged and transported from sender to receiver. It is a conceptual model created by the International Organization for Standardization (ISO) that enables diverse communication systems to communicate using standard protocols.

Levels of Networking: The OSI model describes seven layers that computer systems use to communicate over a network. The modern Internet is not based on OSI but on the simpler TCP/IP model. However, the OSI 7-layer model is still widely used, since it helps visualize and communicate how networks operate and helps isolate and troubleshoot networking problems. The layers and the names of their data units are: Layer 7 - Application - Data; Layer 6 - Presentation - Data; Layer 5 - Session - Data; Layer 4 - Transport - Segment; Layer 3 - Network - Packet, Datagram; Layer 2 - Data Link - Frame, Cell; Layer 1 - Physical - Bit. Images #4 and #5 present the seven layers of the Open Systems Interconnection (OSI) model.

Open Systems Interconnection (OSI) - Image #4

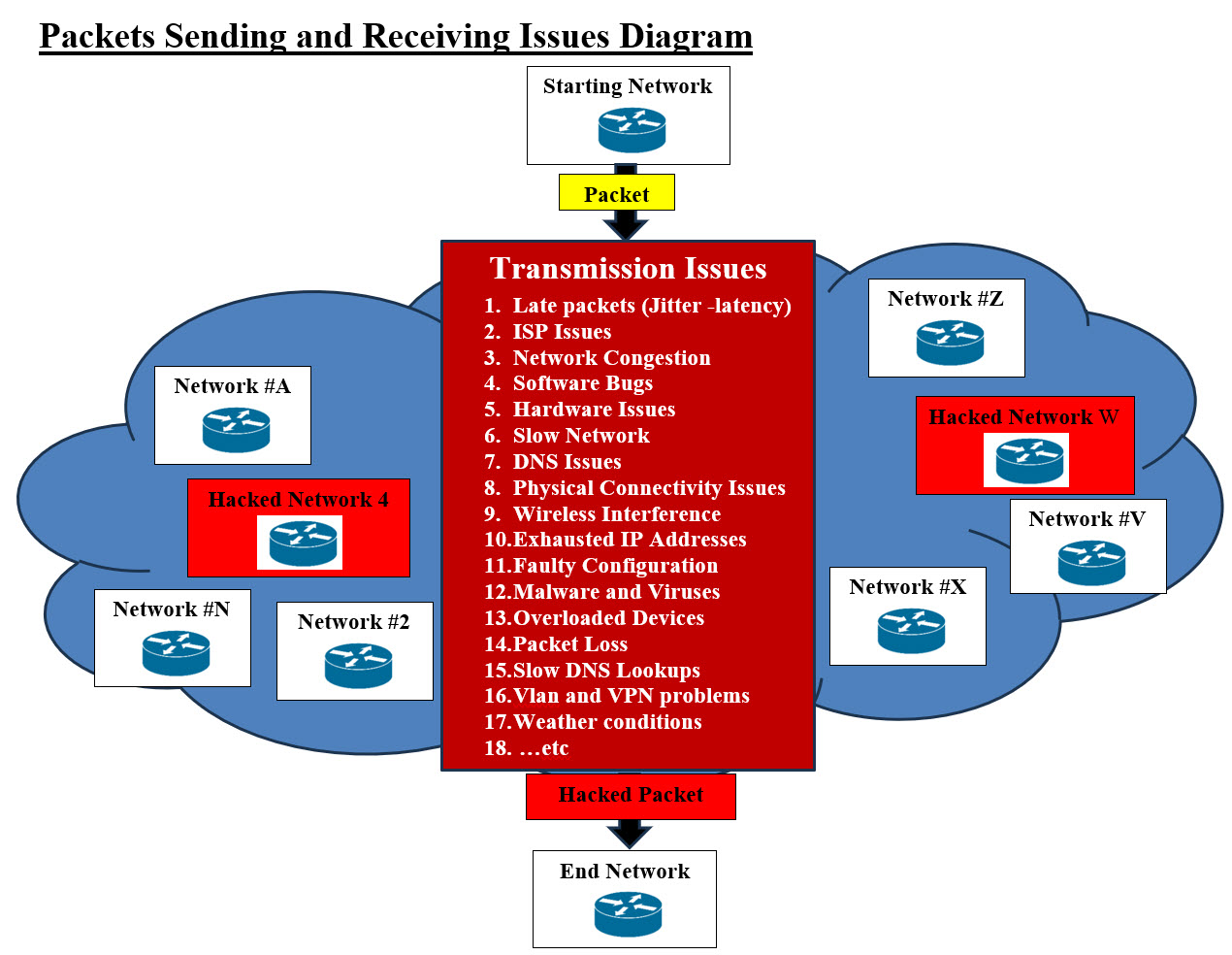

Open Systems Interconnection (OSI) - Image #5

Connections: There are seven types of computer networks: 1. Personal Area Network (PAN) 2. Local Area Network (LAN) 3. Wireless Local Area Network (WLAN) 4. Metropolitan Area Network (MAN) 5. Wide Area Network (WAN) 6. Storage Area Network (SAN) 7. Virtual Private Network (VPN)

Our View of TCP/IP and Packets Issues: Transmission Control Protocol (TCP) is a communications standard that enables application programs and computing devices to exchange messages over a network. We view TCP/IP and packet issues as composed of two parts: 1. Running Processes 2. Transmission (Sending and Receiving)

Packet Running Processes Issues: We want to stress that TCP/IP and packets are a great success, and what we are presenting is not in any shape or form a criticism or a downgrading of them. The problem is that the sheer volume of packet transmission and processing may call for a different viewpoint, one that adds some sort of structure and ID system. Our attempt is to simplify both the processing and the transmission of packets. The number of networks, the types of networks and the running processes all have issues, and the following is our view of some of them: 1. The number of packets going through any node or network is effectively unlimited 2. Storing all of this data is almost impossible 3. Tracing the packets is not easy 4. Data or Payload can be modified or carry malicious code 5. Attackers can generate large numbers of bogus packets and jam the server 6. Packets can arrive out of sequence 7. Network congestion 8. Problems with network hardware 9. Software bugs 10. Software threats 11. Overloaded devices 12. Wired vs. wireless networks 13. Faulty configuration 14. Delay of packets 15. Routing can be an issue 16. Options in choosing routes 17. Redirection of packets to hackers' sites 18. Issues with connections and transport 19. Attacks using Ping, Man in the Middle and Distributed-Denial-of-Service (DDoS)

Our View of Packet Issues: In this section we attempt to give a picture of packet issues and of what both packet senders and receivers have to go through to complete a packet's transmission.

Packets Sending and Receiving Issues Diagram - Image #6

Image #6 presents a rough picture of how networks are distributed on the internet-cloud, where some of these networks could be hacked. Both packet senders and receivers could have issues during packet transmission. Sending and resending could be repeated several times until the receiving network gets all the packets. Note: There is no control over a packet once it starts its journey to the receiving network. Plus, completing a transmission may require several rounds of resending. Not to mention that hackers can interrupt or damage the packets.
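The resend-until-complete behavior described above can be illustrated with a small reassembly buffer. This is a simplified sketch, not how TCP is implemented: real TCP numbers bytes rather than packets and relies on acknowledgments and timers, whereas here each packet carries a hypothetical sequence number from 0 to `total - 1`. The receiver stores packets in whatever order they arrive, ignores duplicate copies caused by resending, and reports which sequence numbers are still missing so the sender can resend them.

```python
def reassemble(received, total):
    """Rebuild a message from packets that may arrive out of order or duplicated.

    received: iterable of (sequence_number, payload_bytes) in arrival order.
    total:    number of packets the sender announced.
    Returns (message, missing): message is None while any packet is missing.
    """
    buffer = {}
    for seq, payload in received:
        if 0 <= seq < total:                   # ignore out-of-range (bogus) numbers
            buffer.setdefault(seq, payload)    # keep the first copy; ignore resent duplicates
    missing = [seq for seq in range(total) if seq not in buffer]
    if missing:
        return None, missing                   # the receiver asks the sender to resend these
    return b"".join(buffer[seq] for seq in range(total)), []


first_try = [(2, b"C"), (0, b"A"), (3, b"D")]       # packet 1 lost, others out of order
print(reassemble(first_try, 4))                      # (None, [1])
after_resend = first_try + [(1, b"B"), (2, b"C")]    # resent packet 1 plus a duplicate
print(reassemble(after_resend, 4))                   # (b'ABCD', [])
```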

Payload within Packet Structure - Image #7

Packet's Payload: The size of a packet's payload can vary from 48 bytes to 4 kilobytes, depending on the network. The payload is the data transported by the packet and is the only data that the source and destination receive. A packet generally consists of a header and a payload. The header contains the IP addresses and ports of the source and destination network devices, while the payload contains the message or data content. On a standard Ethernet link with a 1500-byte MTU, the TCP payload is about 1460 bytes (1500 bytes minus a 20-byte IPv4 header and a 20-byte TCP header, without options). Image #7 highlights the payload. In this section we attempt to show how we can use the Payload, or data field, within the packet to add some sort of identification and control that helps manage the packets' trips. We are proposing a Packet's Cookies approach that creates some sort of transmission structure. What is the cost (in terms of performance) of adding three bytes as tracking bytes to the Payload? We will address this concern later in this section.

Packets Sending and Receiving Issues: The following is a list of packet transmission issues; hackers' attacks can add more. For example, hackers can overwhelm a site with bogus packets, corrupt the packets, or redirect them to their own site or somewhere else. 1. Late Packets (Jitter and Latency) 2. ISP Issues 3. Network Congestion 4. Software Bugs 5. Hardware Issues 6. Slow Network 7. DNS Issues 8. Physical Connectivity Issues 9. Wireless Interference 10. Exhausted IP Addresses 11. Faulty Configuration 12. Malware and Viruses 13. Overloaded Devices 14. Packet Loss 15. Slow DNS Lookups 16. VLAN and VPN Problems 17. Weather Conditions 18. ... etc.

Packets' Payload as Cybersecurity Tools: The number of packets transmitted on the internet is effectively countless. The number of packets going through any node or network is effectively unlimited, and storing all of them is neither possible nor practical. Tracing the packets is also not easy.
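Part of the performance question raised above can be answered with arithmetic. The sketch below assumes a 1500-byte Ethernet MTU with minimum 20-byte IPv4 and TCP headers (no options), which leaves the 1460-byte payload mentioned above, and computes what share of the payload the proposed 3-byte Payload Cookie would consume. It covers only the space overhead; the processing time for building and checking the Cookie is not measured here.

```python
ETHERNET_MTU = 1500  # bytes, common Ethernet default
IPV4_HEADER = 20     # bytes, minimum IPv4 header (no options)
TCP_HEADER = 20      # bytes, minimum TCP header (no options)
COOKIE_SIZE = 3      # bytes, the proposed Payload Cookie


def max_tcp_payload(mtu=ETHERNET_MTU, ip_header=IPV4_HEADER, tcp_header=TCP_HEADER):
    """Largest TCP payload that fits in one packet without fragmentation."""
    return mtu - ip_header - tcp_header


def cookie_overhead_percent(payload_size, cookie=COOKIE_SIZE):
    """Percentage of the payload taken up by the Cookie."""
    return 100 * cookie / payload_size


full = max_tcp_payload()
print(full)                                       # 1460
print(full - COOKIE_SIZE)                         # 1457 bytes left for application data
print(round(cookie_overhead_percent(full), 3))    # 0.205
print(round(cookie_overhead_percent(48), 2))      # 6.25
```

The overhead is about 0.2% for a full-size packet but grows for small payloads (6.25% at 48 bytes), so the real cost depends on the traffic mix.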
Points of Concern: What Hackers Know and Would Do: Before we present our Payload Security Approaches, we need to present a picture of communication tracking, covering: • Internet users (Users, Internet Service Providers and internet Networks) • The tracking methods: IP, DIP and MAC Addresses

Communication Tracking Diagram - Image #8

Media Access Control: A Media Access Control (MAC) address, sometimes referred to as a hardware or physical address, is a unique 48-bit identifier, usually written as 12 hexadecimal digits, that is used to identify individual electronic devices on a network. An example of a MAC address is: 00-B0-D0-63-C2-26. What is a Dynamic IP (DIP) address? A dynamic IP address is a temporary address for devices connected to a network, and it changes over time. An Internet Protocol (IP) address is a number used by computers to identify host and network interfaces, as well as different locations on a network.

Internet Users (Users, Internet Service Providers and internet Networks): Image #8 presents a rough picture of how different users, companies' networks, hacked networks and hackers' networks are running on the internet or the cloud. The number of networks and users is in the billions. To track all these participants, there must be communication identification methods using IP addresses, Dynamic IP addresses (DIP), and device IDs. Devices are identified by their Media Access Control (MAC) addresses.

What are the tracking methods? Image #8 shows how internet User #1 connects to the internet with the help of a service provider company. The service provider supplies the user with a Dynamic IP address, which may change every time the user connects to the provider's network(s). The user's device (computer or phone) is identified by its MAC address, which identifies the physical address of a device on the same local network. In other words, service providers track their users with both DIP and MAC.
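To make the MAC address format concrete, the sketch below parses the example address 00-B0-D0-63-C2-26 into its six octets. The first three octets form the Organizationally Unique Identifier (OUI) assigned to the manufacturer, and two bits of the first octet mark multicast and locally administered addresses (randomized MACs used by many phones set the locally administered bit). The function name and the returned fields are illustrative, not part of any particular library.

```python
import re

# Six pairs of hex digits separated consistently by '-' or ':'.
MAC_PATTERN = re.compile(r"[0-9A-Fa-f]{2}([-:])(?:[0-9A-Fa-f]{2}\1){4}[0-9A-Fa-f]{2}")


def parse_mac(text: str) -> dict:
    """Split a MAC address into its parts and read the two flag bits of the first octet."""
    if not MAC_PATTERN.fullmatch(text):
        raise ValueError(f"not a MAC address: {text!r}")
    octets = bytes(int(part, 16) for part in re.split("[-:]", text))
    return {
        "hex": octets.hex().upper(),                      # the 12 hexadecimal digits
        "oui": octets[:3].hex("-").upper(),               # manufacturer prefix (OUI)
        "multicast": bool(octets[0] & 0x01),              # I/G bit
        "locally_administered": bool(octets[0] & 0x02),   # U/L bit, set by randomized MACs
    }


print(parse_mac("00-B0-D0-63-C2-26"))
```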
Image #8 shows that packets transported from User #1 to User #Z may pass through a number of networks. These can be service providers' networks, legitimate networks, hacked networks or even hackers' networks. Hackers can obtain MAC addresses by hacking service providers' networks. Not to mention, the IP addresses of the source and destination are available for the taking from the packets themselves. The service providers and their clients-users should be the only ones who know the DIP and MAC, and no one else should be able to access them. The reality is that hackers can capture both DIP and MAC. In short, hackers can capture IP, DIP and MAC and then impersonate any user, any network or any service provider. A man-in-the-middle (MitM) attack is a type of cyber-attack in which the attacker secretly intercepts and relays messages between two parties who believe they are communicating directly with each other. We are proposing Packet's Payload Cookies approaches to address hackers' man-in-the-middle (MitM) attacks and other attacks. Image #8 also shows what we are proposing.

Looking at the packet structure in Image #7, the packet is heavily loaded with information that the network uses in sending it. There is no room for additional tracking information or IDs except in the Payload. In other words, can we use a part of the Payload, such as one or more bytes, for tracking and identifying the packets? For example, cookies have proven to be a good tool for identification and for carrying security parameters. Sending and Receiving Ends Device Types: The start and end devices handling a packet's transmission can be one of the following:

Regardless of which start and end devices receive the packets, we are focusing on the Payload and how it should be processed by the software handling the data. Packet's Payload (Data): It is essentially: • A string of bytes • Of a fixed size. Our Packet's Payload Cookie Byte String Structure: At this point in time, we are proposing adding three bytes to the Payload as our Payload Cookie, as shown in the Cookie Bytes Table. Our attempt is to keep hackers, including internal hackers, from figuring out our system even if they are using AI. Changes can be easily implemented using templates and timely scheduled patterns.

Payload Bytes String = Encrypted-Compressed String + 3-Byte Payload Cookie

The 3-byte Cookie can be inserted at any position in the Encrypted-Compressed String. The position is stored in one byte whose most significant bit is the sign. For example, if the position number is 5 (00000101), the Cookie is located after the 4th byte of the Encrypted-Compressed String. If the position number is -5 (10000101), the position is counted from the end of the Encrypted-Compressed String, so the Cookie is located before its last 4 bytes.

Packet's Payload Cookies Scenarios - Compression-Encryption Algorithm Index: How can we use packets' Payload as tools in cybersecurity? Using the packets' Payload as a security tool is part of our hosting architecture design. Example of Packet's Cookie Payload Bit Presentation:
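A minimal sketch of the position rule above, under stated assumptions: the position byte uses sign-magnitude encoding (high bit = sign, low 7 bits = magnitude), which matches the 00000101 and 10000101 examples; a negative position is read as counting from the end of the string; and the receiver already knows the position from the shared schedule, since it must know where to look before it can read the Cookie. The helper names and the ID and index values (0x2A, 0x03) are hypothetical.

```python
COOKIE_SIZE = 3  # ID byte + position byte + encryption-compression index byte


def encode_position(position: int) -> int:
    """Encode a position as a sign-magnitude byte: high bit = sign, low 7 bits = magnitude."""
    if position == 0 or abs(position) > 127:
        raise ValueError("position must be in -127..-1 or 1..127")
    return (0x80 if position < 0 else 0x00) | abs(position)


def decode_position(byte: int) -> int:
    """Inverse of encode_position."""
    magnitude = byte & 0x7F
    return -magnitude if byte & 0x80 else magnitude


def cookie_index(data_length: int, position: int) -> int:
    """Byte offset of the Cookie: |position| - 1 data bytes come before it
    (position > 0) or after it (position < 0)."""
    offset = abs(position) - 1
    if offset > data_length:
        raise ValueError("position is beyond the end of the data")
    return offset if position > 0 else data_length - offset


def insert_cookie(data: bytes, cookie: bytes, position: int) -> bytes:
    """Insert the 3-byte Cookie into the Encrypted-Compressed String."""
    if len(cookie) != COOKIE_SIZE:
        raise ValueError("the Cookie must be exactly 3 bytes")
    i = cookie_index(len(data), position)
    return data[:i] + cookie + data[i:]


def extract_cookie(payload: bytes, position: int) -> tuple[bytes, bytes]:
    """Return (cookie, data) given the position agreed in the shared schedule."""
    i = cookie_index(len(payload) - COOKIE_SIZE, position)
    return payload[i:i + COOKIE_SIZE], payload[:i] + payload[i + COOKIE_SIZE:]


data = b"ABCDEFGHIJ"  # stands in for the Encrypted-Compressed String
for position in (5, -5):
    cookie = bytes([0x2A, encode_position(position), 0x03])  # hypothetical ID and index
    payload = insert_cookie(data, cookie, position)
    print(position, format(encode_position(position), "08b"), payload)
```

After extracting the Cookie, the receiver can compare its position byte with the scheduled position: a mismatch is one sign that the Payload String was altered in transit.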

The job of the software or App loading or sending the packet Payload is to: 1. Encrypt-compress the Payload String 2. Create our three-byte Security Payload Packet Cookie = ID + Position in the Payload String + Encryption-Compression Index used 3. Insert the Payload Cookie into the Payload String at that position

Sending and Receiving Synchronized Communication Scheduling Parameters Table: We would like to remind our readers that we need to build our system with constantly changing IP addresses through which our clients access our services. This means, for example, that at 3:10 PM CST our clients would be able to access the IP address (cloud server or site) AA310.com. After 4:10 PM CST, the clients' browsers would automatically switch to the cloud server-site BB410.com. We are assuming that our cloud services have more than one server and more than one internet connection. At this point, the clients' computers and their internet service providers would be sending packets along a totally different route. The attacking hackers' servers and their damaged packets would be lost and would get connection errors. In the case where we have only one server but more than one IP address, we would drop the current IP address and switch to a new one. All the packets directed to the dropped IP address would be lost, and the hackers' network would get connection errors since the dropped IP address is no longer working. In the case where hackers change or damage the Payload String, our Cookie system would be able to detect that there are issues and start the processes needed to handle the attack. Packet senders and receivers should have a synchronized schedule for sending and receiving packets. The following are some of the synchronized parameters; at this point in time, we are open to brainstorming them: 1. Time 2. Process ID 3. Cookie ID 4. IP Address 5. Encryption Index 6. Compression Index 7. Hash Index 8. Starting Route ID 9. Contact ID 10. Handling Processes Index - Detection Processes 11. Destination ID 12. Source ID
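One way the synchronized parameters could be agreed without sending them over the network is to derive them on both sides from a shared secret key and the current time slot, similar in spirit to time-based one-time passwords (TOTP, RFC 6238). The sketch below is a hypothetical illustration of that idea, not the scheme this document specifies: the one-hour slot, the key, the number of encryption and compression algorithms, and the candidate addresses (taken from the documentation-only ranges reserved by RFC 5737) are all assumptions.

```python
import hashlib
import hmac
import struct

SLOT_SECONDS = 3600  # hypothetical: parameters change every hour
ADDRESSES = ["192.0.2.10", "198.51.100.20", "203.0.113.30"]  # documentation-only IPs


def slot_parameters(key: bytes, unix_time: float, addresses=ADDRESSES) -> dict:
    """Derive the current time slot's shared parameters from a secret key and the clock.

    Sender and receiver run the same function with the same key, so they agree on
    the parameters without ever transmitting them.
    """
    slot = int(unix_time // SLOT_SECONDS)
    digest = hmac.new(key, struct.pack(">Q", slot), hashlib.sha256).digest()
    magnitude = digest[1] % 127 + 1                 # 1..127, fits the position byte
    return {
        "slot": slot,
        "cookie_id": digest[0],                     # 0..255
        "position": -magnitude if digest[2] & 0x80 else magnitude,
        "encryption_index": digest[3] % 8,          # assumes 8 encryption algorithms
        "compression_index": digest[4] % 4,         # assumes 4 compression algorithms
        "address": addresses[digest[5] % len(addresses)],
    }


shared_key = b"example key provisioned out of band"         # hypothetical secret
sender = slot_parameters(shared_key, 1_700_000_000)
receiver = slot_parameters(shared_key, 1_700_000_000 + 60)   # one minute later, same slot
print(sender == receiver, sender)
```

In practice the receiver would likely also accept the previous slot for a short grace period to tolerate clock drift, as TOTP validators do, and the shared key itself would need secure distribution and rotation.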

The goal of the synchronization table is to keep hackers from knowing which IP addresses will be used next, since both senders and receivers would be changing IP addresses as time passes. If there is an attack, the receiver would be able to contact the senders and request that they switch to the next scheduled IP address. The receiver would also be able to send alarms to every concerned party. The Handling Processes Index ensures there is no guessing about what to do when an attack takes place. The indexes keep all the parties involved on the same page about Hashing, Compression, and Encryption. Note: Both the packets' senders and receivers should have the software or Apps needed to parse and process the encrypted-compressed Payload string (data). We need to acknowledge that hackers can obtain a copy of this parsing and processing software and use reverse engineering to figure out its capabilities. Not to mention, hackers are using AI; therefore, we need to build these parsing and processing tools using templates, encryption-compression keys and the system clock as timestamps, which would make them almost impossible to break or even to figure out. A note of caution: hackers can be supported by big business if not by governments, so we need creative and dynamic thinking and must learn from history, from others and from our own mistakes.

Attack Scenarios: We are presenting the following scenarios and how our Packet's Cookie would handle them. Man in the Middle: A man-in-the-middle (MitM) attack is a type of cyber-attack in which the attacker secretly intercepts and relays messages between two parties who believe they are communicating directly with each other.
A man-in-the-middle (MITM) attack, also known as a monster-in-the-middle, person-in-the-middle, or adversary-in-the-middle attack, allows hackers to intervene between two trusted parties to steal personal information and impersonate users. Hackers can accomplish this through hijacking, eavesdropping, poisoning, or spoofing. The goal of an MITM attack is typically identity theft. MitM attacks can be grouped into the following categories: 1. Routing: rerouting packets to the hackers' networks; damaging routing information 2. Packet: sniffing (copying) the packet; damaging the packet Payload; packet injection (inserting malicious code into the Payload); poisoning; overcoming encryption 3. Overloading Server: jamming or overloading the receiving network with an endless number of packets; sending bogus packets; delaying packets so they arrive out of sequence; forcing the packet sender to resend packets 4. Deceiving: eavesdropping (spying); spoofing (deceiving); mDNS spoofing; impersonating both the packet sender and the receiver 5. Session Hijacking: copying the session ID; SSL stripping. Our Answer: In this section, we will try to simplify our approaches by providing a picture of how to stop any intruder with our Packet Payload Cookie approaches.

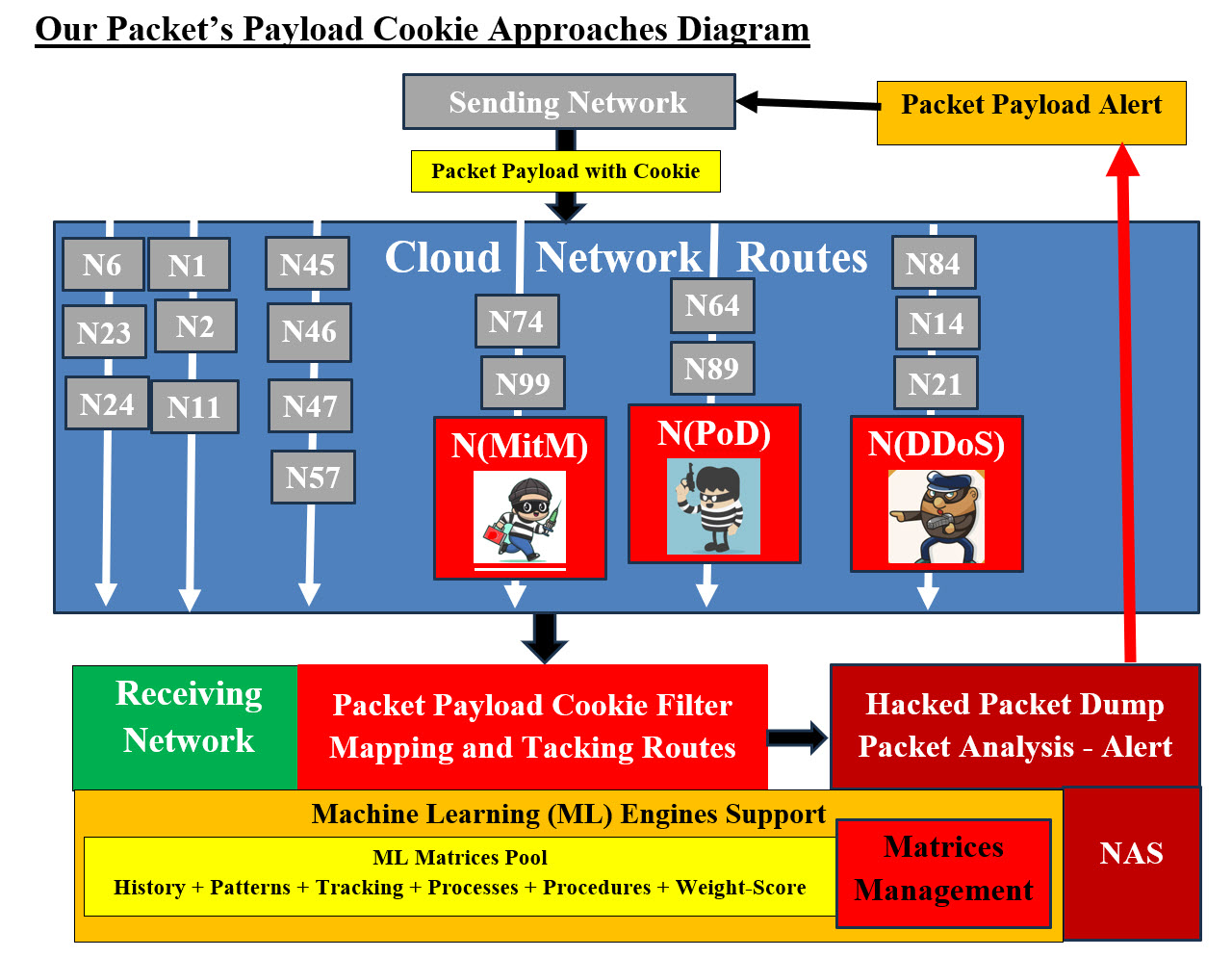

Our Packet Payload Cookie Approaches Diagram - Image #9

Image #9 shows how the sending network or user's PC would add the Payload Cookie to the Payload String. The packets would travel along a number of possible routes between networks, including the N(MitM), N(PoD) or N(DDoS) networks. These hackers' networks would be able to perform a number of attacks on the packet Payload string, as listed in the MitM attack categories. The receiving network's Payload string parser would look for all possible attacks; it would start a number of processes to trap suspicious packets for analysis and raise alerts if there are issues.

Packet Dump for Analysis and Alert: The ability of the receiving networks to filter packets and dump them for analysis and alerting as separate processes would help speed up the handling of incoming traffic. The analysis and alerts would also help the network switch to different IPs or networks and avoid the hackers' routes. Once the alerts and route switching are completed, hackers' attacks would be of no value. Image #9 gives a rough presentation of how our Packet's Payload Cookie Approaches would work. In short, the receiving network's (or user PC's) Filter software or App would parse the packet's Payload string, looking for the Cookie and the correct format. Any issue would trigger the Filter to dump the packet for further analysis and possibly alert the sending network to change the routing or check for errors.

Machine Learning: Machine Learning would be running in the background to support all the system software and Apps. ML Engines: These are software applications that support ML in all of its tasks. Engines create and use matrices to communicate and perform their specific tasks. Matrices Pool: These data matrices are the communication tools that ML Engines consume or produce.
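A minimal sketch of the receiving Filter described above, under assumptions: the Cookie layout is one ID byte, one position byte and one encryption-compression index byte; the expected values for the current time slot are already known to the receiver; and each check reads the same immutable payload buffer and returns its own flag, with any failure sending the packet to a separate dump queue for analysis and alerting. The check names and `expected` fields are hypothetical. The thread pool only shows the parallel structure; for checks this small, a plain loop would be faster in CPython.

```python
import queue
from concurrent.futures import ThreadPoolExecutor

COOKIE_SIZE = 3
dump_queue = queue.Queue()  # packets set aside for analysis and alerts


def make_checks(expected: dict) -> dict:
    """Build the individual Cookie checks for the current time slot."""
    i = expected["offset"]
    return {
        "cookie_present": lambda p: len(p) >= i + COOKIE_SIZE,
        "cookie_id": lambda p: p[i:i + 1] == bytes([expected["cookie_id"]]),
        "position": lambda p: p[i + 1:i + 2] == bytes([expected["position_byte"]]),
        "index": lambda p: p[i + 2:i + 3] == bytes([expected["index"]]),
    }


def filter_packet(payload: bytes, expected: dict, pool: ThreadPoolExecutor) -> bool:
    """Run all checks in parallel on the shared payload; dump the packet if any fails."""
    futures = {name: pool.submit(check, payload) for name, check in make_checks(expected).items()}
    flags = {name: future.result() for name, future in futures.items()}
    failed = sorted(name for name, ok in flags.items() if not ok)
    if failed:
        dump_queue.put((payload, failed))   # hand off to the analysis/alert process
        return False
    return True


expected = {"offset": 4, "cookie_id": 0x2A, "position_byte": 0x05, "index": 0x03}
good = b"ABCD" + bytes([0x2A, 0x05, 0x03]) + b"EFGHIJ"
tampered = b"ABCD" + bytes([0x2A, 0x05, 0x07]) + b"EFGHIJ"   # index byte altered

with ThreadPoolExecutor(max_workers=4) as pool:
    results = [filter_packet(p, expected, pool) for p in (good, tampered)]

print(results)                       # [True, False]
print(dump_queue.get_nowait()[1])    # ['index']
```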
Network-Attached Storage (NAS) and Using a Filing System: Network-attached storage (NAS) is dedicated file storage that enables multiple users and heterogeneous client devices to retrieve data from centralized disk capacity. NAS devices are flexible and scale out, meaning that as you need additional storage, you can add to what you have. The goal of having NAS is to keep a hard copy of anything important happening in the system, which helps with rollback and audit trails. NAS would be used to store packets for further analysis. NAS would also be used to load and start ML Engines and matrices, giving the system and ML the flexibility to add more processes or Apps on the fly as needed. Matrices Management: There must be a management system to handle matrix production and consumption and to manage the ML Engines. Mapping and Tracking Routes: The Cookie Filter would also map and track routes in order to alert all the concerned parties. The goal is to identify hackers' networks or hacked networks along the packets' routes. Our Packet's Payload Cookie approaches are architected to address both hackers and their AI tools. Our approach is more common sense and thinking outside the box. Neither the required Cookie implementation software nor the Apps handling our approaches are difficult to develop and maintain. Our attempt is to keep hackers and their AI tools trying to figure out what to do and what is what. The development of the required Cookie implementation software and Apps relies on: first, common sense, then intelligence, templates, dynamic keys and timestamps, leaving hackers and their tools in the dark and puzzled. Routing Control and Scheduling: Routing is the basis for stopping hackers and their AI tools from being able to continue their attacks.
For example, the networks sending and receiving packets must be able to switch to different routes on the fly without delays. We are not experts in routing, but we need to team up with experts for the sending and receiving networks, the browsers and their service providers to be able to do the following: 1. Routing should follow a dynamic schedule so routes are changed on a scheduled basis 2. On-demand rerouting of packets 3. Mapping of routes and tracking of possible network issues 4. Client-server direct routes 5. Informing clients, users and other networks of possible issues with a particular route or network 6. Connection options such as satellites, ground, cloud, cables, ground phone lines and other technologies 7. Routing vendors must develop software tools to help with routing options 8. Misc.: we are open to other options. The big internet players must lead in addressing routing issues and rerouting options. IP, DIP and hashed MAC: All the networks and service providers must be able to provide alternative IP, DIP and hashed MAC values to their clients and to other interfacing networks. Packet: Our Packet's Payload Cookie approaches, with the Cookie's size, ID, position and encryption-compression index, would help identify and recognize any change to the Payload data (string of bytes). The encryption-compression index makes the payload almost impossible to undo, since every company or network would be developing its own unique encryption-compression software and Apps. Hackers' AI would struggle with such an ocean of encryption-compression software and methodologies. The browser-side encryption-compression and Payload Cookie must be developed with the same diversity for each network and company. Such development is not simple, but it is doable and must be well tested and verified. Hackers' reverse-engineering and AI tools would be kept busy trying to figure out what is what and when things change on the fly.
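One caveat about providing hashed MACs: a plain, unkeyed hash of a MAC address is easy to reverse by brute force, because there are at most 2^48 MAC addresses and the manufacturer prefix narrows the search further. The hypothetical sketch below instead uses a keyed HMAC-SHA-256 with a secret held by the service provider and mixes in a period label, so the same device gets a different pseudonym each period and outsiders without the key cannot reverse or link it. The key, the period format and the 16-hex-digit truncation are assumptions.

```python
import hashlib
import hmac


def hashed_mac(mac: str, key: bytes, period: str) -> str:
    """Return a keyed, per-period pseudonym for a MAC address."""
    # Normalize so 00-B0-D0-63-C2-26 and 00:b0:d0:63:c2:26 give the same result.
    normalized = mac.replace("-", "").replace(":", "").lower()
    message = f"{period}|{normalized}".encode()
    # Truncated to 64 bits (16 hex digits) to keep the identifier short.
    return hmac.new(key, message, hashlib.sha256).hexdigest()[:16]


provider_key = b"secret held only by the service provider"   # hypothetical
print(hashed_mac("00-B0-D0-63-C2-26", provider_key, "2024-05-01"))
print(hashed_mac("00-B0-D0-63-C2-26", provider_key, "2024-05-02"))
```

The two printed values differ because the period changes, while the provider, holding the key, can recompute either one from the real MAC.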
Overloading Server: Once networks have rerouting options and Payload Cookie checking, hackers would need to figure out how to predict when, where and how to change their attacks. Deceiving and Session: Again, our Packet's Payload Cookie approaches would prevent anyone from knowing the content of the Payload data. This also helps networks and users recognize any intruder. Distributed-Denial-of-Service (DDoS): A distributed denial-of-service (DDoS) attack is a malicious attempt to disrupt the normal traffic of a targeted server, service or network by overwhelming the target or its surrounding infrastructure with a flood of Internet traffic. Our Answer: Our answer to MitM, which combines on-demand rerouting, scheduled rerouting and Payload Cookie recognition, would leave hackers' attacks on any network fruitless. The IPs, DIPs, hashed MACs and routes would have changed long ago, and the attacked IPs would no longer be in service. Hackers would be attacking ghost networks whose IPs, DIPs and hashed MACs no longer exist. Ping of Death Attack: A Ping of Death (PoD) attack is a form of DDoS attack in which an attacker sends the recipient device simple ping requests as fragmented IP packets that are oversized or malformed. These packets do not adhere to the IP packet format when reassembled, leading to heap/memory errors and system crashes. Our Answer: Our answer is the same as for DDoS and MitM: rerouting, scheduled routing, and Cookie recognition. What is the cost (in terms of performance) of adding three bytes as tracking bytes to the Payload? We believe that the overhead of adding the Payload Cookie processes to each packet is worth it. First, we are adding only three bytes to the Payload string. Second, encryption and compression are standard procedures.
The only added processes are the Pre, Post and Outside Processes: Pre (the sending network or user's PC): formatting the Cookie according to a scheduled system or process; inserting the Cookie bytes into the Payload string. Post (the receiving network): parsing the Payload string for the Cookie; analyzing the Cookie format; dumping suspicious Cookies. Outside Processes: Cookie analysis; alerts. At this point, we cannot give an exact figure for how long each added process would take. These processes are very simple to implement, plus the receiving networks must wait for all the packets to arrive anyway. We recommend that a number of parallel parsing processes share the same data buffer. This buffer would hold the Payload Packet String (including the Cookie). Each process would have its own flag to report issues with the Cookie security parameters. The time spent waiting for all the packets to arrive can be used to run these added processes.

Scheduling of Events - Synchronization: We need to address the fact that hackers have a limited scope, and all their energy, teams, support and tools, including AI, are focused on a few tasks. They have all the time in the world for trial and error. They may also have inside help. They would do whatever it takes to find vulnerabilities. Our answer is to keep them guessing. What does it mean to keep them guessing? 1. Make it impossible for anyone to know what will happen next 2. Be deliberately unpredictable 3. Avoid predictable patterns by creating situations that are ambiguous or confusing 4. Open up possibilities for miscommunication 5. Use math and bitwise keys to create small dynamic templates and patterns 6. ... open to suggestions. We recommend using: 1. Multiple IP and VIP addresses 2. Virtualization 3. Scheduled, synchronized communication protocols and tracking 4. Audit trails and Machine Learning to track and find patterns, errors and methodologies 5. The system clock for timestamps, which helps keep things recent and up to date 6. Indexes and hashing 7. Machine Learning to track processes and procedures and to analyze what is working and what is not 8. Machine Learning to track hackers' successes and failures 9. Lookout: all parties involved must be on the same page, and anyone can be used by a hacker or be hacked 10. Security buffers and virtual servers

Creation of Coalitions of Companies and Vendors: Teamwork and brainstorming are definitely a plus; we need to create coalitions of teams and share resources. The following is what we recommend: Vendors, companies and governments must create coalitions to share resources and help one another. Such coalitions would force hackers to deal with a much bigger defense and more cooperation.

Machine Learning and Matrices: We have created a number of pages and YouTube videos; the following are the links to what is available on the internet. We also added another section following the links that explains our approaches to Security Services Engines and Matrices. Machine Learning and Cybersecurity: Our ML presentation is composed of: • How do we view ML as a concept? • Our approaches, tools and platform. Check the following links on SamEldin.com under projects: Concept: • Machine Learning Sam Eldin Current Projects: 3 - Sam's Machine Learning Analysis, Data Structure-Architect 4 - Virtual Edge Computing with Machine Learning 4.1 - Case Study - Electric Vehicles 4.2 - Case Study - Autonomous Vehicles 4.3 - Case Study - Oil and Gas Refinery 8 - Object Oriented Cybersecurity Detection Architecture Suite 9 - Our Compression-Encryption Cyber Security Chip

Weight-Score Engine: This engine turns evaluation and decision-making into numbers for faster processing and added intelligence.

Inventory of All Services: The goal of hackers is to create problems or do damage to the running system. Backups and rollback are critical to offsetting any hackers' attacks. There should be three inventory frameworks: 1. Static Inventory 2. Dynamic Inventory 3. Rollback Inventory. These inventories must be created without causing delays or performance issues. Therefore, there must be written processes and procedures for creating these inventories, and these processes and procedures must be well tested and confirmed-approved. Note: Some of our recommendations may not be doable for some companies, but these are our recommendations. Not all the inventories can be achieved by development and testing teams. Static Inventory: A list and copies of all running software, including the Operating System. Dynamic Inventory: Our approach to providing any service would be to use our Virtual Business Unit Brokers. In short, any running system would be loaded into a Virtual Machine (VM) with its Operating System (OS) and resources. Once such a system is up and ready for service, there should be a procedure to save a copy of the running system (VM) to NAS. This would allow the system to be recreated from NAS rather than started again from scratch. This step would save time and effort and provide consistency for tracking by our ML or teams of experts. It would also help in quickly reloading such a running system. Rollback Inventory: In the same way that a running system is saved to NAS at startup, the rollback inventory would be taken at a safe point in the running system without interrupting or delaying it. Backup and Rollback: All three inventories presented are our rollback approaches, since we can reload the running system from NAS. The systems saved in NAS are our backups for any running system. We have just presented our Backup and Rollback approaches, and we are open to other approaches as well. Audit Trail: The audit trail would create matrices for Machine Learning to process. Reverse Engineering Services: Vendors' reverse engineering software would be used to look for hackers' code or any discrepancies.
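For the Static Inventory, one common way to make the list of installed software verifiable is a file-integrity manifest: record a SHA-256 digest for every file at a known-good point, then compare later snapshots against it to spot added, removed or modified files. The sketch below illustrates that general technique; it is not the document's own procedure. The directory layout and file names in the usage example are hypothetical, and a production tool would stream large files and protect the baseline itself, for example by keeping it on read-only storage.

```python
import hashlib
import tempfile
from pathlib import Path


def build_manifest(root) -> dict:
    """Map each file's path (relative to root) to the SHA-256 digest of its contents."""
    root = Path(root)
    return {
        path.relative_to(root).as_posix(): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def compare_manifests(baseline: dict, current: dict) -> dict:
    """Report files added, removed or modified since the baseline was taken."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(p for p in baseline.keys() & current.keys() if baseline[p] != current[p]),
    }


with tempfile.TemporaryDirectory() as tmp:
    system = Path(tmp)
    (system / "bin").mkdir()
    (system / "bin" / "service").write_bytes(b"service build 1")
    (system / "server.conf").write_text("port=443\n")
    baseline = build_manifest(system)                          # taken at a known-good point

    (system / "server.conf").write_text("port=8080\n")        # changed configuration
    (system / "bin" / "helper").write_bytes(b"unexpected")    # new, unknown file
    report = compare_manifests(baseline, build_manifest(system))

print(report)
```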
Virtual Testing and Grading of Each Service: Virtual testing, grading of the running system, and creating hackers' attack scenarios must be done to ensure that our system would be able to handle any hackers' attacks. Automated Testing and Assessments Using Machine Learning: Automated testing, assessments and Machine Learning tools are the components needed to perform analysis, cross-referencing, and searches for patterns, tendencies, history, etc. Matrices, as shown in Image #5, would be the communication data that all the Automated Testing, Assessments and Machine Learning tools use to perform their jobs. What are the most common types of cyberattacks? 1. Malware 2. Denial-of-Service (DoS) Attacks 3. Phishing 4. Spoofing 5. Identity-Based Attacks 6. Code Injection Attacks 7. Supply Chain Attacks 8. Social Engineering Attacks 9. Insider Threats 10. DNS Tunneling 11. IoT-Based Attacks 12. AI-Powered Attacks 13. Zero-Day Exploit 14. Zero-Day Attack How often do businesses get hacked? An estimated 54 percent of companies say they have experienced one or more attacks in the last 12 months. Testing System Components Plus Automation: Our view of automation is building roadmaps of processes and steps. These roadmaps can be used by humans, with their intelligence, and by machines, with their astonishing speed and great number of possible options. Such speed and options would be used to add intelligence. First, on any given network there are hundreds of thousands of items running (software and hardware). To handle hundreds of thousands of items, automation is a must, not an option. Tracking or testing these items is a challenge. Divide and Conquer - Logical and Physical Units: Our approach is "Divide and Conquer". Therefore, we need to start with one target network or small network and divide it into logical and physical units. We build what we call Matrices of processes or steps.
For example, the matrix in Component Testing Matrix - Image #5 would be used by humans and machines to perform a number of tests or tasks. Any task or test should be a small script run by a human and/or a machine. Such a script should return a value to the caller for tracking and feedback. The script's start and end times are critical in evaluating performance. Not Applicable (N.A.) would be used so that we can build big matrices with numerous items, since some tests may not be applicable to some items.

System Components' Testing Check List Matrices - Image #5: We need to identify all possible hacking signs and their corresponding tests and detection. We need to develop automated and manual test matrices for all software and hardware system components. Both ML and Testers would populate these matrices. The test result would be:

• Pass (P)
• Fail (F)
• Not Applicable (NA)

Any item can be an entry in a logical as well as a physical unit. Therefore, we can check the physical or logical unit independently at our convenience. The scoring or performance is critical to our intelligent analysis and processes. The manual testing of these matrices must be manageable and doable by humans. As for machine testing, the sky is the limit on the number and frequency of tests, as long as the testing does not affect system performance. Virtualization can also play a big role in testing. We can clone the tested unit onto a virtual server and perform any test we wish on that independent virtual server. Virtual testing would not interfere with system performance.

Intelligence: To simplify intelligence in our case, we use the data collected from these matrices to run a number of scenarios (100 or more) and give each scenario a score or grade. Based on the scores, the machine would be able to make a decision, but in reality it is a calculated guess drawn from those 100 or more scenarios.
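The component-testing idea (a small script per test that returns a value to its caller, records its start and end times, and yields P, F or NA) can be sketched as below. This is a hypothetical Python illustration: `run_test`, the `SSH_CONFIG` data and the `ssh_root_login_disabled` check are placeholders standing in for real test scripts that would inspect actual components.

```python
from datetime import datetime, timezone
from typing import Callable, Optional

PASS, FAIL, NA = "P", "F", "NA"

def run_test(item: str, test_name: str,
             check: Callable[[str], Optional[bool]]) -> dict:
    """Run one small test script and return a matrix row with its result and timing.

    `check` returns True (pass), False (fail) or None (not applicable to this item).
    """
    started = datetime.now(timezone.utc)
    outcome = check(item)
    ended = datetime.now(timezone.utc)
    result = NA if outcome is None else (PASS if outcome else FAIL)
    return {
        "item": item,
        "test": test_name,
        "result": result,
        "started": started,
        "ended": ended,
        "duration_s": (ended - started).total_seconds(),
    }

# Hypothetical data standing in for what a real script would read from each component.
SSH_CONFIG = {"server-1": "PermitRootLogin no", "server-2": "PermitRootLogin yes"}

def ssh_root_login_disabled(item: str) -> Optional[bool]:
    if item not in SSH_CONFIG:
        return None  # the test does not apply to this item
    return SSH_CONFIG[item] == "PermitRootLogin no"

matrix = [run_test(i, "ssh_root_login_disabled", ssh_root_login_disabled)
          for i in ["server-1", "server-2", "printer-1"]]
for row in matrix:
    print(row["item"], row["result"], f"{row['duration_s']:.6f}s")
```

Returning `None` for "not applicable" keeps one test usable across a large mixed matrix: the printer simply gets NA instead of a misleading failure.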
Since we have the data for recent events plus the history of previous events, our machine would be able to perform cross-referencing, deduction, probability, decisions, statistics and reports, and to find frequencies, patterns and tendencies.

Total Score and Assessments: Each component test would have a total score for further analysis. The test score can be used to flag any issues and start the detection processes.

Test ID and Scheduled Test Time: We need to build a Test ID and its timestamp based on scheduled testing. The ML matrices and the Testers' matrices should be cross-referenced for further analysis and for possibly raising red flags.
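The total score, the scheduled Test ID and the cross-reference between the ML and Testers' matrices can be sketched together. The sketch assumes the score is the percentage of applicable tests that passed (NA excluded), that a red flag is raised wherever the two matrices disagree or either reports a failure, and that the Test ID is the component name plus its scheduled time. These rules, the function names and the sample data are hypothetical choices for illustration only.

```python
from datetime import datetime
from typing import Optional

def total_score(results: list[str]) -> Optional[float]:
    """Percentage of applicable tests that passed; "NA" entries are ignored."""
    applicable = [r for r in results if r != "NA"]
    if not applicable:
        return None  # nothing applicable, so no score
    return 100.0 * applicable.count("P") / len(applicable)

def make_test_id(component: str, scheduled: datetime) -> str:
    """Build a Test ID from the component name and its scheduled test time."""
    return f"{component}-{scheduled:%Y%m%dT%H%M}"

def cross_reference(ml: dict[str, str], testers: dict[str, str]) -> list[str]:
    """Return tests to red-flag: ML and testers disagree, or either reports a failure."""
    flags = []
    for test in sorted(ml.keys() | testers.keys()):
        ml_result, tester_result = ml.get(test), testers.get(test)
        if ml_result != tester_result or "F" in (ml_result, tester_result):
            flags.append(test)
    return flags

ml_matrix = {"open_ports": "P", "patch_level": "F", "admin_accounts": "P", "wifi": "NA"}
tester_matrix = {"open_ports": "P", "patch_level": "F", "admin_accounts": "F", "wifi": "NA"}

print(make_test_id("web-01", datetime(2024, 5, 1, 2, 30)))  # web-01-20240501T0230
print(round(total_score(list(ml_matrix.values())), 1))      # 66.7
print(cross_reference(ml_matrix, tester_matrix))            # ['admin_accounts', 'patch_level']
```

Flagging disagreements as well as failures means a case where ML reports a pass but a human tester reports a failure (here `admin_accounts`) is surfaced for investigation rather than averaged away.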

Image #5 presents a sample of the assessment matrices which would be created. Audit Trail and Tracking would also produce data matrices. Machine Learning (ML) support tools would parse and analyze all these matrices, plus the cross-references of these matrices, which are also data matrices. These matrices represent Big Data, and ML would be the tools we develop to help process such Big Data.

Intelligent Automated Hosting Management System: We present some internet definitions of Hosting Management plus AWS hosting management, followed by our own views of Hosting Management.

What is hosting management? Managed hosting is an IT provisioning model in which a service provider leases dedicated servers and associated hardware to a single customer and manages those systems on the customer's behalf.

How AWS Managed Service Providers Help: Companies increasingly look to the public cloud to ignite innovation and uncover new ways to drive future growth. With managed cloud solutions, businesses are able to deliver stronger customer experiences, deeper insights, increased uptime, and reliability from the flexible economic models. However, cloud is complex. Achieving these outcomes through cloud solutions can be complicated. New skills, new tools, and new processes are often required. Many businesses turn to AWS managed service providers like TierPoint for guidance.

AWS Management Services: The Managed AWS services can remove the burden of repetitive tasks and offer high levels of support, freeing up your IT teams from managing the day-to-day operations while also helping you control IT costs, migrate important data to the cloud, and speed up your business transformation.

Our Hosting Management Views: There are two types of management at hand:

1. Hosting Services Management - The Business of Hosting
2. Clients' Hosting Management - The Services Provided

Hosting Services Management - The Business of Hosting: Intelligent Hosting Services Management is what we are architecting and designing. We also implement Machine Learning (ML) as part of the management. To simplify our approach, we would like our audience to think of management as the following parts:

1. Data - data matrices
2. Automation - using templates, processes, procedures, tracking and audit trail
3. Intelligent Processes - using processes, reports, ML, human ingenuity and human experience
4. ML and Lessons Learned

Data - Data Matrices: Everything running on or communicating with the running system must create data matrices of its status.

Automation - using templates, processes, procedures, tracking and audit trail:

1. Tracking and audit trail would be analyzed by ML to create more data matrices
2. Data matrices would be cross-referenced to create new data matrices
3. The created data matrices will be parsed to populate templates
4. Processes and procedures would use both data matrices and templates to perform their steps and create reports
5. ML would create Decision-Making Reports and matrices
6. Human managers would use all the matrices and reports to make decisions

Intelligent Processes - using processes, ML, human ingenuity and human experience: Human managers, with the help of ML, would be the ones who make decisions.

ML and Lessons Learned: ML would create Lessons Learned data matrices and reports.

Clients' Hosting Management - The Services Provided: As for Clients' Hosting, clients would use our Intelligent DevOps Editors to run their systems. |
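Steps 3 and 4 of the automation list above (parsing data matrices to populate templates and produce reports for human managers) could look like the sketch below, which uses Python's standard `string.Template`. The template layout, field names and sample row are hypothetical; the point is only that a report is generated mechanically from matrix data and then handed to a person for the decision.

```python
from string import Template

# Hypothetical report template; the placeholder names are illustrative.
REPORT = Template(
    "Component: $component\n"
    "Test ID: $test_id\n"
    "Total score: $score%\n"
    "Red flags: $flags\n"
    "For decision by: $manager"
)

def build_report(row: dict) -> str:
    """Populate the report template from one row of a data matrix."""
    return REPORT.substitute(
        component=row["component"],
        test_id=row["test_id"],
        score=f"{row['score']:.1f}",
        flags=", ".join(row["flags"]) or "none",
        manager=row["manager"],
    )

row = {
    "component": "web-01",
    "test_id": "web-01-20240501T0230",
    "score": 66.6667,
    "flags": ["admin_accounts", "patch_level"],
    "manager": "hosting-ops",
}
print(build_report(row))
```

`Template.substitute` raises `KeyError` if a placeholder is left unfilled, which is a reasonable safeguard here: a report with a missing field fails loudly instead of reaching a manager incomplete.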
