with Machine Learning ©

|

Sam's Virtual Edge Computing

with Machine Learning © |

|---|

|

Sam's Virtual Edge Computing with Machine Learning

Introduction: Sometimes it is very hard to see the actual benefit of a new solution such as the Edge Computing Boxes-System. It seems that enterprises big and small are all singing the same Edge Computing Boxes song, as if Edge Computing Boxes were the only way out of Data Centers' problems with distance, latency, capacity, operations, cost and data streaming. We believe Edge Computing Boxes would create another layer, if not several layers, of overhead in security, maintenance, performance and more. The approach is premature at this point in the game. Sadly, hardware vendors are pushing the Edge Computing Boxes-System to meet the expected new demand for solving latencies and the risks associated with them.

Number and Locations of Edge Computing Boxes: The second issue with the Edge Computing Boxes-System is how many boxes would be needed to handle the growing demand. As for where the boxes would be located, and what would come with those locations, that is a can of worms we would rather not open. From our viewpoint, the Edge Computing Boxes-System is not the answer but the start of new issues, including Cybersecurity.

Running Applications, Edge Computing and "one size fits all": Edge Computing was created to help applications handle issues with Data Centers' distance, latency, capacity, operations, cost and data streaming. Current Edge Computing is not customized to each running application's needs; it is more of a "one size fits all".

Our Answer, Autonomous Vehicle (self-driving vehicle) and Machine Learning: One thing we can learn from our surroundings is that the answer is often in front of us, yet we cannot see it. Our answer to Edge Computing can be found in the autonomous (self-driving) vehicle. For example, a fully autonomous vehicle needs an Edge Computing Box inside it. Such a vehicle generates as much as 40 terabytes (one terabyte equals 1,000 gigabytes) of raw data an hour from cameras, radar, and other sensors.
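To put the 40 terabytes an hour figure in network terms, the short calculation below converts it into a sustained data rate. It is only a back-of-the-envelope sketch: the function name is ours, the terabyte is taken as 10^12 bytes (matching the 1,000-gigabyte definition above), and the fleet of 100 vehicles is a hypothetical number chosen purely for illustration.

```python
SECONDS_PER_HOUR = 3600
BYTES_PER_TERABYTE = 10**12  # decimal terabyte: 1,000 gigabytes


def sustained_gbit_per_second(terabytes_per_hour: float) -> float:
    """Convert an hourly data volume into an average rate in gigabits per second."""
    bytes_per_second = terabytes_per_hour * BYTES_PER_TERABYTE / SECONDS_PER_HOUR
    return bytes_per_second * 8 / 10**9


one_vehicle = sustained_gbit_per_second(40)
fleet = 100 * one_vehicle  # hypothetical fleet of 100 vehicles (assumption)

print(f"One vehicle: {one_vehicle:.1f} Gbit/s")
print(f"100 vehicles: {fleet / 1000:.1f} Tbit/s")
```

Under these assumptions a single vehicle averages roughly 89 Gbit/s of raw data, and a hundred vehicles close to 9 Tbit/s, before any filtering or compression is applied.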
Therefore, any nearby Edge Computing Box would choke on such data, not to mention what would happen if that box had to deal with hundreds if not thousands of autonomous vehicles. To us, a fully autonomous vehicle can be considered another Data Center, one that drives around. A fully autonomous vehicle needs a local Edge Computing system on board, with Machine Learning capabilities, to do the following:

• Process the new data and filter what is needed
• Convert such data into a compact format
• Compress and encrypt the new data for securing it and sending it to the cloud
• Learn as it runs the fully autonomous vehicle
• Give the components of the autonomous vehicle intelligence so that they handle their own issues
• Manage the entire autonomous vehicle through a management system hardened against hackers

By the same reasoning, a local system with Machine Learning support can be applied to oil refineries, oil drilling in the middle of the oceans, satellites, heavy machinery operation, etc. Our "Inside-Out" approach is guided by the belief that the inner strengths and capabilities of the system will produce a sustainable future.

Our Goal: Our main goal is to present our Virtual Edge Computing with Machine Learning system as a replacement for the Edge Computing Boxes-System. We believe the Edge Computing Boxes-System is overkill: an added layer (or layers) and an overhead in security, maintenance, performance, cost, etc. We assume that our audience includes both non-technical and technical readers with different backgrounds, so we need to define briefly what Edge Computing is. We also need to cover certain topics before presenting our approach. The following are this page's topics:

1. Data Centers
2. Data Centers' Issues
3. Data Center Latencies Issues
4. What is Edge Computing
5. Our Virtual Edge Computing Answer
6. Machine Learning
7. Number of Our Edge Computing and Their Locations
8. Added Security Layer
9. Case Studies
a. Electric Vehicles
b. Autonomous Vehicles
c. Oil Refinery

1. Data Centers

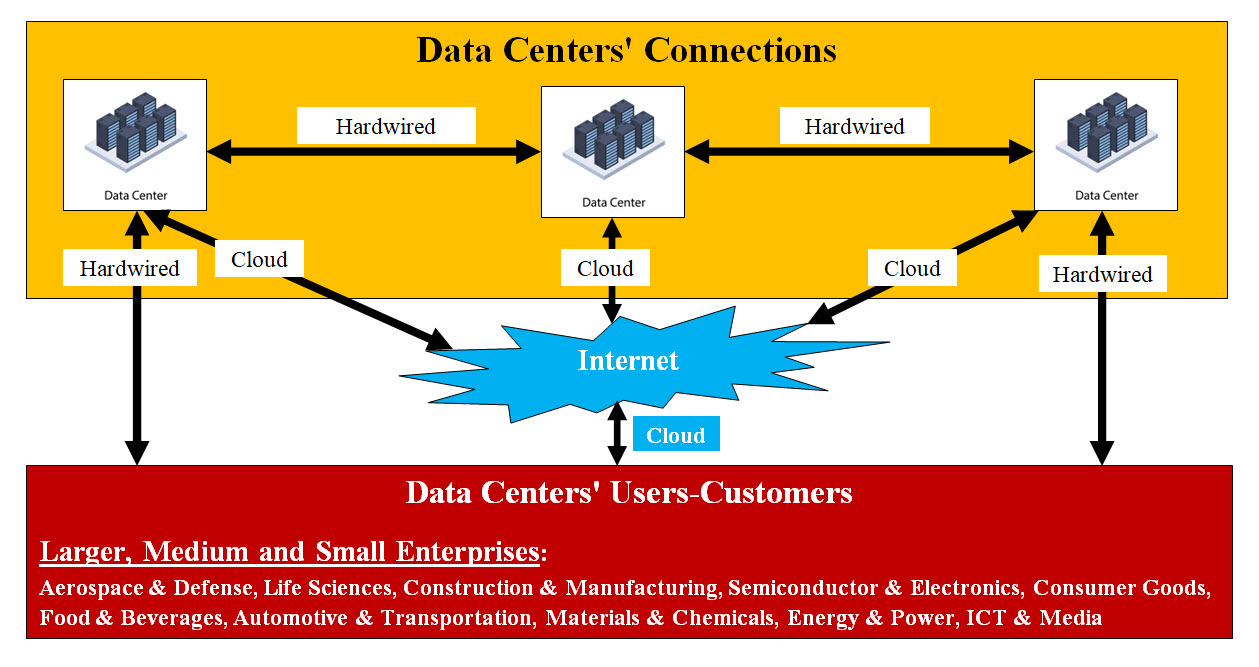

Image #1

What is a Data Center? A data center is a facility equipped for storing, processing, and distributing data and applications. Data centers support almost all computation, data storage, and network and business applications for the enterprise. Data Centers are used by government agencies, educational bodies, telecommunications companies, financial institutions, retailers of all sizes, and the sellers of online information and social networking services such as Google and Facebook.

Pros and Cons of Data Centers
Pros: Greater Control, 24/7 Access
Cons: High Cost of Labor, High Capital Expenditures, Limited Physical Space

Colocation: A colocation facility is a data center facility in which a business can rent space for servers and other computing hardware.

Interconnection: In short, it is the cooperation between different vendors that share one location which services all of them.

Data Center Connectivity: There are numerous connectivity options and types, including cross connects, dedicated and blended internet, cloud direct connects and interconnection. Image #1 is a rough presentation of Data Centers, Colocation and Interconnection.

Data Centers Synchronization: Data synchronization is the ongoing process of synchronizing data between two or more devices and updating changes automatically between them to maintain consistency within systems. A number of data center support products exist, such as Oracle GoldenGate, which provides real-time data integration, transactional data replication, data comparison across heterogeneous systems, transactional change data capture, and data manipulation.

2. Data Centers' Issues

Managing a data center is an around-the-clock job, and identifying and addressing data center challenges is key to business success. The following are the issues we need to address, so that we can see how Edge Computing tries to answer them:

• Management
• Distance
• Capacity and Capacity Planning
• Security
• Operations
• Cost

3. Data Center Latencies Issues

What is data center latency? Latency is the measure of how long it takes data to reach its destination, that is, the delay of the incoming data. It is typically a function of distance: the time it takes data to travel between two points. Data Centers have thousands of servers hosting hundreds of thousands of applications, so network latency can cause major problems with applications' performance. Latency can also be affected by the number of users and devices connected to the internet servers.

4. What is Edge Computing

Searching the internet, we found the following definitions:

What is Edge Computing? Edge computing is a distributed computing framework that brings enterprise applications closer to data sources such as IoT devices or local edge servers. The purpose of edge computing is to deliver computing capability to the very edge of the network. With the development of edge computing, the proximity to data at its source can deliver strong business benefits: faster insights, improved response times and better bandwidth availability.

What is a Point of Presence (PoP)? A point of presence (PoP) is a demarcation point, access point, or physical location at which two or more networks or communication devices share a connection.

Our View of Edge Computing

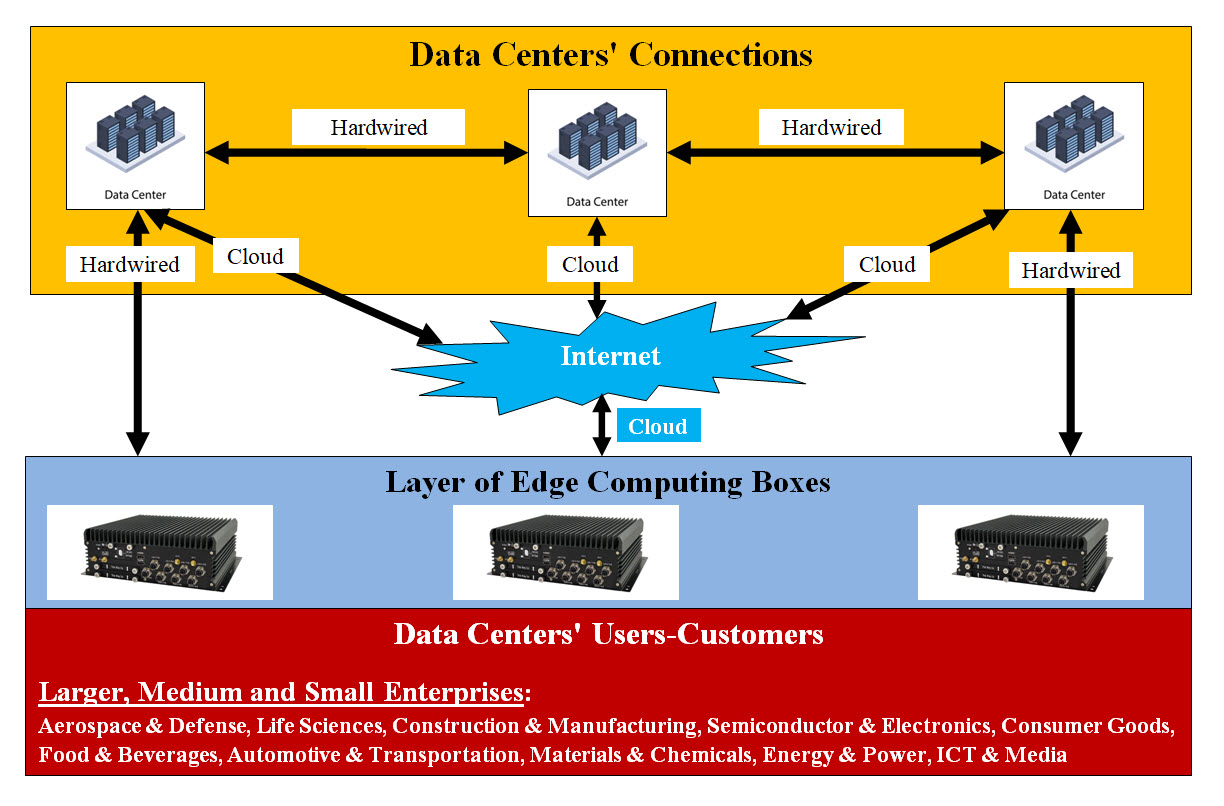

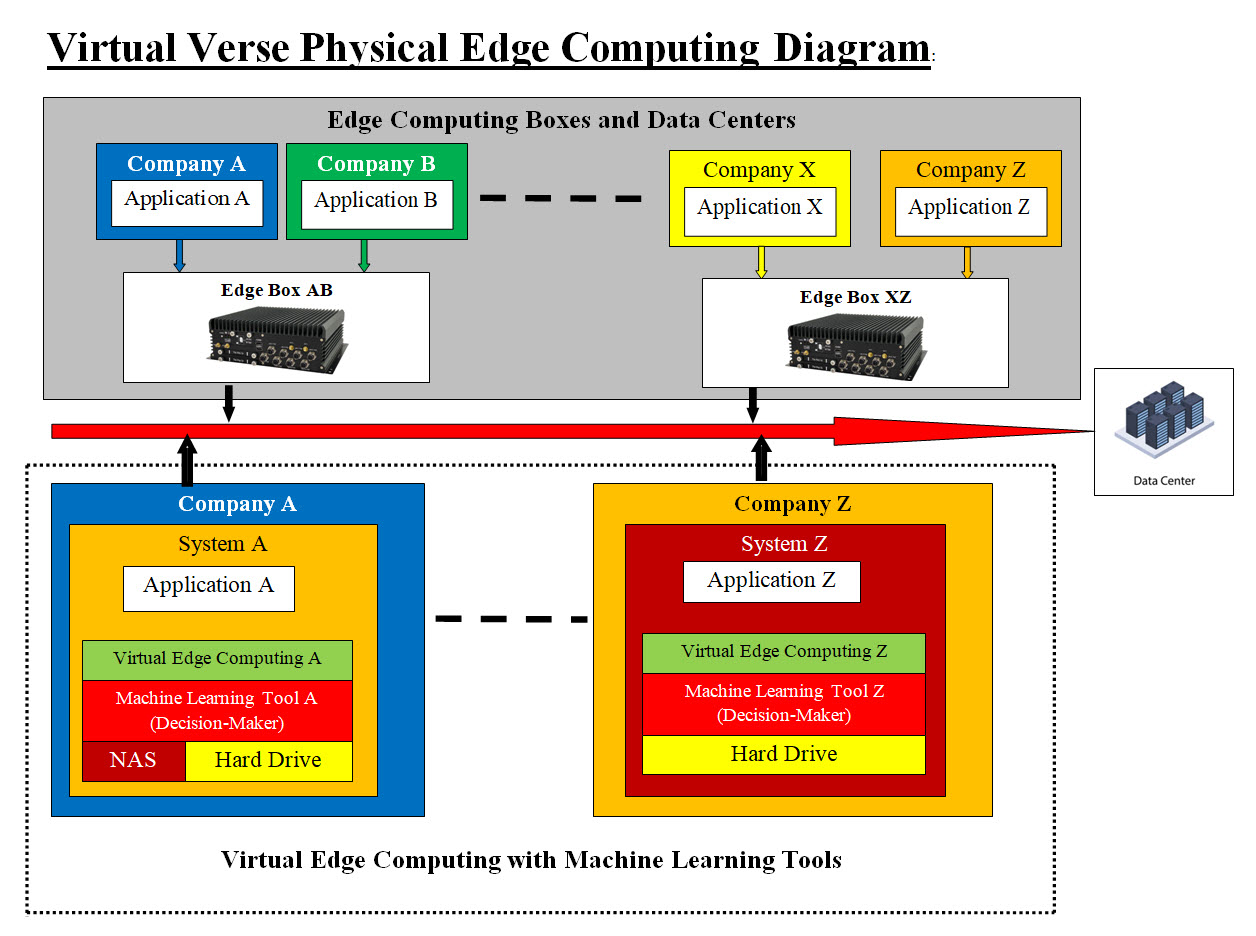

Image #2

Our View of Edge Computing: Image #2 is a rough sketch of how we see Edge Computing: an added layer of physical hardware (Edge Computing Boxes) and an added interface to data centers. Our question is: do Edge Computing Boxes address the Data Centers' issues?

• Management - adds more tasks
• Distance - questionable
• Capacity and Capacity Planning - questionable
• Security - adds more security risks
• Operations - adds more work and maintenance
• Cost - increases the cost

We do not see any advantage in using Edge Computing Boxes. Sadly, they add more risk and workload, and the approach is premature at this point in the game.

5. Our Virtual Edge Computing Answer:

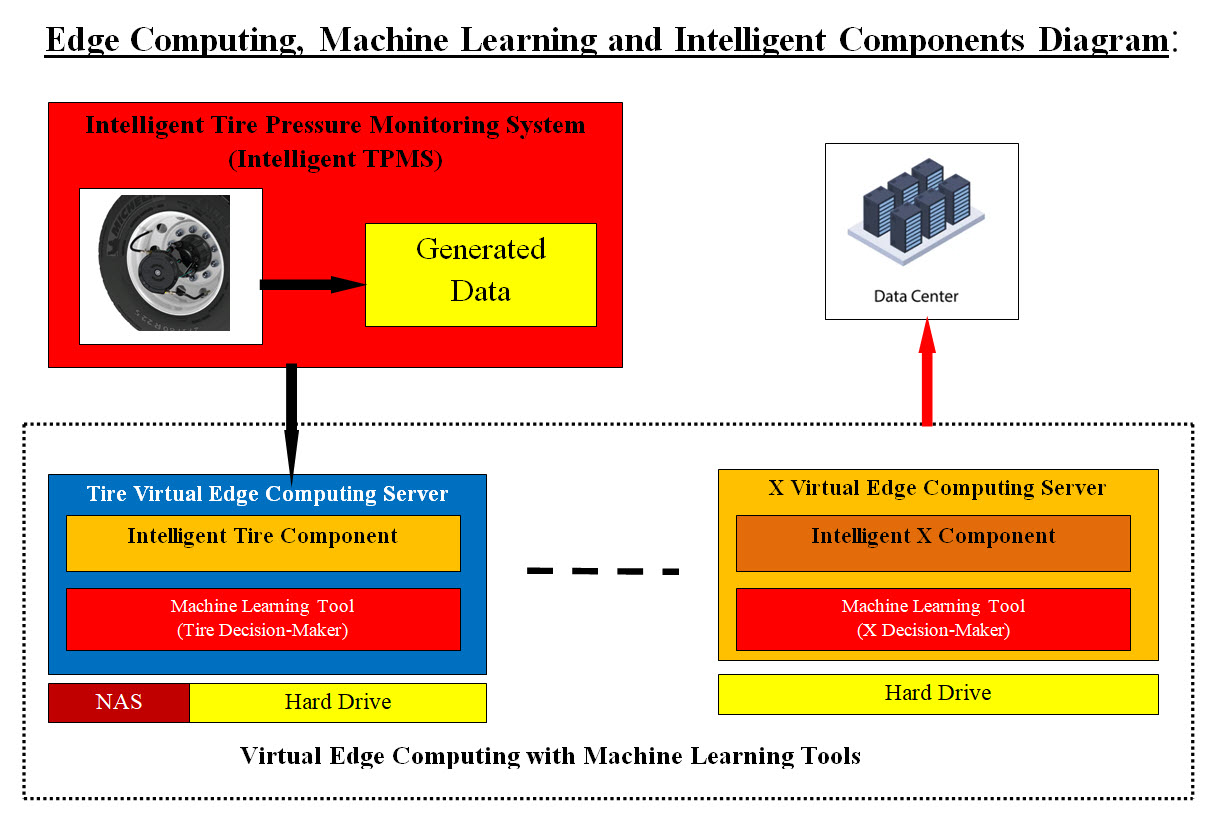

Image #3

Image #3 presents both Edge Computing using Edge Computing Boxes and our Virtual Edge Computing Server plus Machine Learning supporting the Decision-Maker. Our Virtual Server would not require any new hardware; it would use the existing system (memory, hard drive, NAS, or storage area network, SAN) as backup and storage in case of Data Center connection failure or latencies. Our Virtual Edge Computing would be customized to help a specific system or application. The Machine Learning Tools would also be customized to help with the application's decision making.

Our answers to the same questions presented to Edge Computing Boxes: Looking at Image #3, regardless of whether Company A or Company Z runs on its own network or is hosted by a service provider, all the company needs to do is create the following for each critical application:

• A Virtual Edge Computing Server to run the critical application's customized Edge Computing support software
• A Virtual Server to run dedicated Machine Learning Tools (supporting decision-makers)
• More CPU-memory for creating these virtual servers - as needed
• More storage (NAS and/or a bigger hard drive) if such storage is not included in the hosting - as needed

For example, for an Oil Refinery company with a number of critical applications, each critical application needs its own specific handling support software. Therefore each critical application would require its own Virtual Edge Computing Server and its own customized Machine Learning support tools (Decision-Maker). What we have just described is customized handling of each critical application, not one Edge Computing Box handling all of these critical applications.

Vertical and Horizontal Handler for Each Critical Application:

Vertical Scaling: Each critical application would be vertically scaled by adding more application support in its own Virtual Edge Computing Server.
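The per-application provisioning and vertical scaling described above can be sketched roughly as follows. This is a minimal illustration under our own assumptions, not a definitive design: the `VirtualServer` type, the `plan_for` and `scale_up` helpers, the `vec-`/`vml-` name prefixes, the default sizes and the application names are all hypothetical and do not come from any particular hypervisor or hosting API.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class VirtualServer:
    name: str
    role: str       # "edge" (support software) or "ml" (decision-maker tools)
    cpu_cores: int
    ram_gb: int


def plan_for(app_name: str, cpu_cores: int = 2, ram_gb: int = 8) -> list[VirtualServer]:
    """Plan the two dedicated virtual servers for one critical application."""
    slug = app_name.lower().replace(" ", "-")
    return [
        VirtualServer(f"vec-{slug}", "edge", cpu_cores, ram_gb),
        VirtualServer(f"vml-{slug}", "ml", cpu_cores, ram_gb),
    ]


def scale_up(server: VirtualServer, extra_cores: int = 0, extra_ram_gb: int = 0) -> VirtualServer:
    """Vertical scaling: give an existing virtual server more CPU and memory."""
    return replace(
        server,
        cpu_cores=server.cpu_cores + extra_cores,
        ram_gb=server.ram_gb + extra_ram_gb,
    )


def total_demand(servers: list[VirtualServer]) -> tuple[int, int]:
    """Total (cores, RAM in GB) the bare-metal host must supply."""
    return sum(s.cpu_cores for s in servers), sum(s.ram_gb for s in servers)


# Hypothetical critical applications of an oil refinery (names are illustrative).
servers = []
for app in ["Leak Detection", "Pump Control"]:
    servers.extend(plan_for(app))

# One application needs more support, so its edge server is scaled vertically.
servers[0] = scale_up(servers[0], extra_cores=2, extra_ram_gb=8)
cores_needed, ram_needed = total_demand(servers)
```

Keeping one edge server and one Machine Learning server per application makes the "as needed" CPU-memory additions easy to total up against what the existing bare-metal host can supply.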
Horizontal Scaling: Each critical application already has its own Virtual Edge Computing Server plus the virtual server for Machine Learning support.

CPU-Memory: Based on the existing hardware (bare-metal) and the required performance, both CPU processing power and core-cache memory would be upgraded to deliver that performance, without adding an Edge Computing Box and all the connections, maintenance, software, security, etc. that it requires.

Storage: If any critical application runs into latencies or other issues, both the Virtual Edge Computing Server and the Machine Learning supporting tools would handle them, and they would be able to store all critical data, objects, tracking audit trails, etc. The hard drive and NAS would be used for rollback and recovery. The compressed-encrypted data would be sent to the cloud for further analysis and Machine Learning cases.

Our answers to the Data Centers' issues:

• Management - Our Virtual Edge Computing Server would be automated and may not need any help or support from the existing management system.
• Distance - The virtualization, intelligence and automation of our Virtual Edge Computing Server would eliminate issues including latencies, usage and loads, distance, security risk, performance, maintenance, etc.
• Capacity and Capacity Planning - Our Virtual Edge Computing and Machine Learning servers need such support.
• Security - Our Virtual Edge Computing and Machine Learning servers would aid in securing the running system.
• Operations - Our Virtual Edge Computing and Machine Learning servers need such support.
• Cost - Almost none.

6. Machine Learning

We are turning Machine Learning into a science rather than a guessing game of trial and error using algorithms. How can we turn Machine Learning into an intelligent supportive decision-maker?
Once we have data, and we also have patterns related to the data based on the business case (Zeros-Ones), the computer can be trained to use the patterns to parse the data and find answers to problems, trends, correlations, frequencies, and so on. Again, we are using the Zeros-Ones of the business cases to build patterns - see my Machine Learning pages.

Virtualization: To help reduce the load and overhead on any running system, virtual systems or servers can be created on demand to host the needed Machine Learning tools, which run in the background as an intelligent supportive decision-maker.

Virtual Edge Computing - onsite analysis and offsite learning: Instead of creating Edge Computing Boxes to handle the issues at hand, we recommend the creation of Virtual Edge Computing Servers or Systems with Machine Learning. Each Virtual Edge Computing Server-System would work with one and only one component. For example, in the case of electric vehicles, one Machine Learning Virtual Server (we call it VML_TPMS, see the Intelligent Components section) would be created to handle the Intelligent Tire Pressure Monitoring System (Intelligent TPMS) and would work only with the TPMS. The data collected would be parsed and analyzed by VML_TPMS, then compressed, encrypted and sent to the cloud for further evaluation and learning off site. Newly found issues and solutions would then be delivered as an update to the Machine Learning tools installed on the car's computers.

Intelligent Components (Supportive Intelligent Decision-Making): To define intelligent components, let us look at the Intelligent Tire Pressure Monitoring System (Intelligent TPMS).

Intelligent Tire Pressure Monitoring System (Intelligent TPMS): The Intelligent TPMS not only gauges the air pressure of the tires but also accurately identifies road conditions and tire motion across the road surface. Another advantage of the new system is that, combined with sideslip prevention systems, it integrates tire pressure monitoring and sideslip prevention into a single system.

Machine Learning and Intelligent Components: Looking at the Intelligent TPMS (Image #4) and its features, we see that it is able to integrate tire pressure monitoring and sideslip prevention. This means that it generates data which Machine Learning tools can learn from as the data is collected. Therefore we can add the specifically needed Machine Learning tools to run in the background and help with the decision making of the Intelligent TPMS.
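As a rough illustration of a background tool that learns as TPMS data is collected, the sketch below keeps a running baseline of tire pressure (Welford's online mean and variance) and flags readings that fall far outside it, then compresses a batch of readings for upload. This is a simple statistical stand-in that we chose for brevity, not the Machine Learning tooling itself. The class and function names, the 3-sigma threshold, the warm-up length and the sample readings are all assumptions. Encryption is deliberately left out because the Python standard library does not provide it; a vetted cryptography library would be needed for that step.

```python
import json
import math
import zlib


class PressureMonitor:
    """Learns a running pressure baseline and flags outlying readings."""

    def __init__(self, sigmas: float = 3.0, warmup: int = 5) -> None:
        self.sigmas = sigmas
        self.warmup = max(warmup, 2)  # need at least 2 readings for a variance
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations (Welford)

    def observe(self, psi: float) -> bool:
        """Return True if the reading looks anomalous; otherwise learn from it."""
        if self.count >= self.warmup:
            std = math.sqrt(self._m2 / (self.count - 1))
            if std > 0 and abs(psi - self.mean) > self.sigmas * std:
                return True  # flagged readings are kept out of the baseline
        self.count += 1
        delta = psi - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (psi - self.mean)
        return False


def pack_for_upload(readings: list[float]) -> bytes:
    """Serialize and compress a batch of readings (encryption not included)."""
    return zlib.compress(json.dumps(readings).encode("utf-8"))


def unpack_upload(blob: bytes) -> list[float]:
    """Inverse of pack_for_upload, as the cloud side might apply it."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


# Illustrative readings in psi; the last one simulates a sudden pressure drop.
readings = [35.0, 35.2, 34.9, 35.1, 35.0, 35.1, 28.0]
monitor = PressureMonitor()
flags = [monitor.observe(r) for r in readings]
blob = pack_for_upload(readings)
```

With these sample values only the final reading is flagged, which is the point at which a decision-support tool would alert the driver or another vehicle system.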

Image #4

Image #4 shows how Edge Computing can be implemented by creating a virtual server (Virtual Edge Computing Server) as a dedicated virtual system that monitors and supports any intelligent component. A Virtual Edge Computing Server has two main components:

• Dedicated intelligent software handling the component's issues
• Machine Learning Tools acting as Decision-Maker Support

Such a dedicated system would use the existing storage system on the bare-metal server for tracking, audit trails, storage, backup and rollback as needed. The questions here would be:

• How many Virtual Edge Computing Servers would be needed?
• How much memory and bare-metal support would be needed?

How many Virtual Edge Computing Servers would be needed? Our Electric Vehicles case study uses the 2022 Tesla Model Y, with one Virtual Edge Computing Server for each of the main car features.

How much memory and bare-metal support would be needed? We assume that each car feature has its own system (CPU, cache, cores, etc.) and that, with current technology, each system most likely has between 8 GB and 32 GB of RAM. Therefore, doubling or tripling the RAM, or even raising it as high as 250 GB, to create our Virtual Edge Computing Servers is doable and not expensive. Adding storage (hard drive, NAS, SAN) is likewise doable and not expensive.

In conclusion, any time data is generated, Machine Learning tools can run in the background as a supportive intelligent decision-maker.

7. Number of Our Edge Computing and Their Locations

The user or client computers and devices (user side) that run applications, and the actual bare-metal servers (server side) located in the Data Center, are the only physical parts: hardware that exists and that anyone can physically touch. The rest has a virtual existence. User applications, the OS, internet connections, the security system and all the running software are the virtual part of the system.
Therefore, our Virtual Edge Computing servers or containers are also virtual and can be created and run on any side that has a virtual existence: on the user's computer or on servers located in the Data Centers. Which side they are created on depends on which option is optimal. For example, my personal laptop may have Virtual Edge Computing servers or containers running to perform their tasks. By the same concept, Data Centers can have Virtual Edge Computing servers or containers created as temporary support in case any servers are temporarily not working. How many Virtual Edge Computing servers or containers can be created depends on the available CPU cores and hard disk capacity.

8. Added Security Layer

Virtual Edge Computing servers or containers can be used as Cybersecurity buffers running security detection software.

9. Case Studies

Case Study Definition: A case study is an in-depth study of one person, group, or event. In a case study, nearly every aspect of the subject's life and history is analyzed to seek patterns and causes of behavior. Case studies can be used in various fields, including psychology, medicine, education, anthropology, political science, and social work.

We present the following case studies to illustrate the benefits of adding Machine Learning to a Virtual Edge Computing system.

a. Electric Vehicles
We are using the 2022 Tesla Model Y as our electric vehicle Case Study.
Electric Vehicles©

b. Autonomous Vehicles
Autonomous Vehicles©

c. Oil Refinery
Oil Refinery© |

|---|